Why Your Firm Needs a Point Clouds Library, Not Just a Folder of Scans

For any firm deep in renovations, as-builts, and adaptive reuse, a point clouds library is more than just storage. It’s a centralized system that transforms raw scan data from a chaotic folder of files into a strategic, reusable asset that drives accuracy, predictability, and margin protection into every project.

From Data Chaos to a Strategic Asset

Let's be honest. For too many architects, builders, and surveyors, managing point cloud data is a constant headache. Project folders become a dumping ground for scattered scan files, riddled with inconsistent naming, unclear coordinate systems, and massive, uncompressed datasets.

Modelers waste billable hours searching for the right scan. Preventing RFIs and protecting margins feels like a losing battle. This disorganization isn't just an IT problem—it's a direct threat to your operational consistency and profitability.

This chaos inevitably leads to redundant site visits, modeling based on risky assumptions, and an unpredictable Scan-to-BIM workflow. The core issue is treating raw data as a disposable byproduct of a single project instead of a long-term firm asset.

The Shift to Production Maturity

Firms that operate with production maturity do things differently. They build and maintain a disciplined point clouds library—a system designed for clarity and reliable delivery, not just storage. This is far more than a tidy folder structure; it's a core operational system that makes reality data findable, trustworthy, and instantly usable.

We’ve seen teams cut Scan-to-BIM prep time significantly when their point clouds were standardized, indexed, and aligned to a single coordinate strategy.

By establishing clear standards for organizing point cloud data, these firms achieve outcomes that directly protect the bottom line:

- Accelerated Modeling: Teams get instant access to correct, registered data, which cuts hours of prep time before modeling even begins.

- Improved Accuracy: A single source of truth for existing conditions eliminates guesswork and dramatically reduces errors in as-built modeling.

- Enhanced Reusability: Data from past projects becomes a valuable resource for future renovations or feasibility studies on the same site, preventing redundant site visits.

- Predictable Turnaround Times: A structured Scan-to-BIM library creates operational consistency, which is the foundation for scalable delivery pods and reliable project timelines.

Building Your Data Foundation

A well-managed library is the bedrock of efficient BIM documentation and clean coordination. With strong indexing, naming standards, metadata tagging, and a clear storage structure, teams can find reality data instantly. This strategic approach turns your point cloud data from a project liability into a powerful asset that supports decision checkpoints and permitting prep across a building's lifecycle.

The Four Pillars of a Production-Ready Library

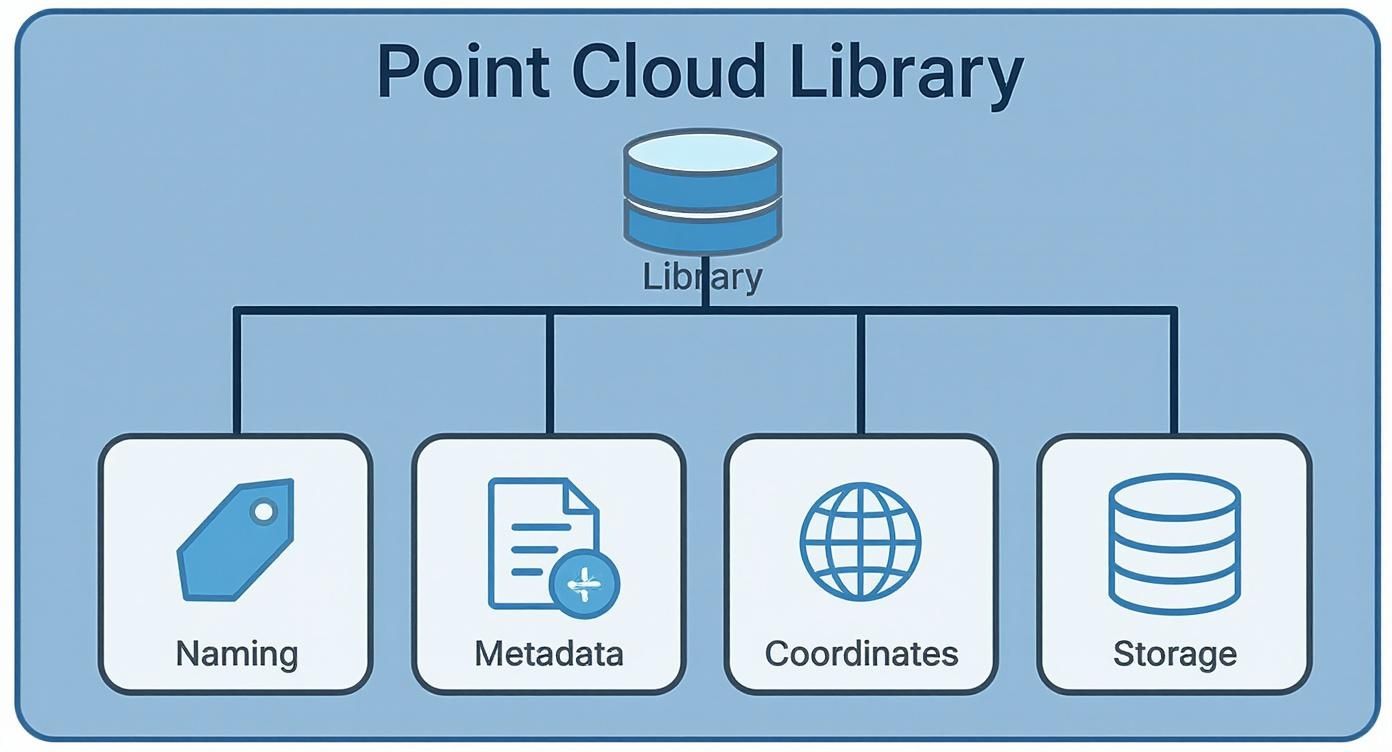

Building a truly functional point clouds library isn’t about creating another folder on the server. It’s about implementing a disciplined framework that turns raw, chaotic data into a reliable, findable asset. A production-ready system boils down to four essential pillars: consistent naming, rich metadata, strict coordinate system discipline, and a logical storage structure.

If any one of these pillars is weak, you guarantee confusion, rework, and wasted hours. This framework isn't about rigid rules for their own sake; it’s about creating the operational consistency that protects your margins and enables predictable delivery. When every scan is findable, verifiable, and usable, your teams model faster with fewer assumptions.

Pillar 1: Consistent Naming Standards

The fastest way to lose control of your data is with inconsistent file naming. Files named Scan_001_Final.rcp or Building-A-Exterior.e57 create ambiguity and force your modelers to waste time opening file after file to find the right one.

A strong naming convention makes your data instantly identifiable. Your standard should be logical and pack in key identifiers. A field-tested approach includes:

- Project ID: A unique code for the project (e.g., 24-015).

- Date of Capture: The day the scan occurred (e.g., 20240822).

- Location/Area: A clear descriptor of what was scanned (e.g., L01-Lobby or EXT-North-Facade).

- Data Type: Is it raw or processed? (e.g., RAW or PROC).

- Version Number: A simple way to track updates (e.g., v01).

A filename like 24-015_20240822_L01-Lobby_PROC_v01.rcp tells your team exactly what they're looking at. This simple template discipline prevents the costly mistake of modeling from an outdated scan.
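A convention like this is easiest to enforce when a script can check it. Here is a minimal sketch in Python, assuming project IDs always look like two digits, a hyphen, and three digits (as in 24-015) and that area descriptors use only letters, digits, and hyphens. The function names `parse_scan_name` and `build_scan_name` are illustrative, not part of any particular tool.

```python
import re
from datetime import date, datetime
from typing import Optional

# Assumed layout: <ProjectID>_<YYYYMMDD>_<Area>_<RAW|PROC>_v<NN>.<ext>
SCAN_NAME = re.compile(
    r"^(?P<project>\d{2}-\d{3})_"
    r"(?P<date>\d{8})_"
    r"(?P<area>[A-Za-z0-9-]+)_"
    r"(?P<kind>RAW|PROC)_"
    r"v(?P<version>\d{2})"
    r"\.(?P<ext>[A-Za-z0-9]+)$"
)


def parse_scan_name(filename: str) -> Optional[dict]:
    """Return the naming fields, or None if the name breaks the convention."""
    match = SCAN_NAME.match(filename)
    if match is None:
        return None
    fields = match.groupdict()
    try:
        # Reject impossible capture dates such as 20240230.
        datetime.strptime(fields["date"], "%Y%m%d")
    except ValueError:
        return None
    fields["version"] = int(fields["version"])
    return fields


def build_scan_name(project: str, capture_date: date, area: str,
                    kind: str, version: int, ext: str) -> str:
    """Assemble a filename and confirm it round-trips through the parser."""
    name = f"{project}_{capture_date:%Y%m%d}_{area}_{kind}_v{version:02d}.{ext}"
    if parse_scan_name(name) is None:
        raise ValueError(f"fields do not fit the naming convention: {name}")
    return name
```

Running the parser over a project folder gives a quick list of files that need renaming before they enter the library. Adjust the pattern if your project IDs or area codes follow a different shape.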

Pillar 2: Rich Metadata Tagging

While a good name identifies the file, metadata gives it context. This is what turns your library from a collection of files into a searchable, intelligent database. It’s where you store crucial details needed to know if a point cloud is suitable for a task.

Think of metadata as the digital logbook for each scan. It’s the institutional knowledge that prevents you from having to re-verify or re-process data every time a new team member needs it.

You absolutely need to be tagging these essentials:

- Scan Technician: Who was on-site running the scanner?

- Scanner/Sensor Type: What gear was used? (Leica RTC360, NavVis VLX, etc.).

- Coordinate System: The project’s defined coordinate system (e.g., State Plane, Local).

- Registration Accuracy: The final RMS error from your registration software.

- Processing Notes: Any specific details on cleaning, classification, or alignment.

This information is gold for your QA processes and ensures anyone using the data understands its origins and limitations.
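One lightweight way to capture these tags is a small sidecar file stored next to each point cloud. The sketch below assumes a JSON sidecar and uses illustrative field names (`technician`, `registration_rms_mm`, and so on); your scanning or document management software may already offer its own metadata fields, in which case use those instead.

```python
import json
from dataclasses import asdict, dataclass, fields
from typing import List


@dataclass
class ScanMetadata:
    """The essential tags listed above, one record per point cloud."""
    technician: str
    scanner: str
    coordinate_system: str
    registration_rms_mm: float
    processing_notes: str = ""


def to_sidecar_json(meta: ScanMetadata) -> str:
    """Serialize the record so it can be saved beside the scan file."""
    return json.dumps(asdict(meta), indent=2)


def missing_fields(meta: ScanMetadata) -> List[str]:
    """List any tags that were left empty, for use in a QA check."""
    missing = []
    for f in fields(meta):
        value = getattr(meta, f.name)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(f.name)
    return missing
```

Because the sidecar is plain JSON, it stays readable without special software and can be indexed later by whatever search tool your firm adopts.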

Pillar 3: Strict Coordinate System Discipline

A unified coordinate system is non-negotiable. It's the single most important factor in preventing the alignment headaches that plague Scan-to-BIM workflows. When different scan datasets use conflicting coordinates, your team wastes countless hours trying to manually place them in Revit or ReCap, a process that introduces a huge risk of error.

The rule is simple: establish one documented coordinate system per project and stick to it.

All scan data, whether from terrestrial scanners, drones, or mobile mappers, must be registered and exported to this single source of truth. This discipline ensures every point cloud snaps into your design software in the correct location, ready for immediate as-built modeling.
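The one-system-per-project rule is simple to audit once coordinate systems are recorded for each scan. As a rough sketch, assuming you can export a list of (project ID, filename, coordinate system) records from your metadata, a hypothetical helper like `find_coordinate_conflicts` flags any project that mixes systems:

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def find_coordinate_conflicts(
    scans: Iterable[Tuple[str, str, str]]
) -> Dict[str, List[str]]:
    """Return projects whose scans declare more than one coordinate system.

    Each record is (project_id, filename, coordinate_system). Labels are
    compared case-insensitively with surrounding whitespace removed.
    """
    systems = defaultdict(set)
    for project_id, _filename, coordinate_system in scans:
        systems[project_id].add(coordinate_system.strip().lower())
    return {
        project_id: sorted(found)
        for project_id, found in systems.items()
        if len(found) > 1
    }
```

This only compares the labels people typed in. It does not verify that the point cloud's actual coordinates match the label, which still needs a check against project control.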

Pillar 4: A Logical Storage Structure

Finally, a scalable folder structure ties it all together. A well-designed structure guides users to the correct data and prevents files from getting lost. This predictability is mission-critical for distributed teams. The goal is to make finding the right file intuitive, not a treasure hunt.

A common and highly effective structure separates raw data from production-ready files:

- [Project_ID]/01_Raw_Scans/: Holds the untouched, proprietary scan files straight from the scanner. This is your archival source of truth.

- [Project_ID]/02_Registration/: This is where registration project files live (e.g., a ReCap or CloudCompare project).

- [Project_ID]/03_Production_Clouds/: Here are the final, cleaned, and registered point clouds (e.g., .rcp, .e57) ready for use in design software like Civil 3D.

- [Project_ID]/04_Documentation/: This folder contains registration reports, site photos, and control point data.

This separation ensures modelers always pull from the "Production" folder, preventing the accidental use of unprocessed data. This clarity is fundamental to a reliable Scan-to-BIM library and the backbone of consistent project delivery.
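To keep the structure identical from project to project, it helps to generate it rather than build it by hand. Here is a minimal sketch using Python's standard `pathlib`. The function name `scaffold_project` and the idea of a single library root folder are assumptions for illustration.

```python
from pathlib import Path

# The four folders described above, in order.
SUBFOLDERS = (
    "01_Raw_Scans",
    "02_Registration",
    "03_Production_Clouds",
    "04_Documentation",
)


def scaffold_project(library_root: str, project_id: str) -> Path:
    """Create the standard folder set for one project and return its path.

    Safe to run more than once: existing folders are left untouched.
    """
    project_dir = Path(library_root) / project_id
    for name in SUBFOLDERS:
        (project_dir / name).mkdir(parents=True, exist_ok=True)
    return project_dir
```

Calling `scaffold_project("/path/to/library", "24-015")` at project kickoff means nobody has to remember the folder names, and every project looks the same to a modeler who joins midstream.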

A well-organized point cloud library isn't just a "nice-to-have." It's a strategic asset built on four foundational pillars. Each plays a critical role in turning raw data into a reliable resource that saves time, reduces errors, and ultimately protects your bottom line.

Pillar Breakdown for a Strategic Point Clouds Library

| Pillar | Core Components | Key Outcome |
|---|---|---|
| 1. Naming Standards | Project ID, Date, Location, Data Type, Version | Files are instantly identifiable and searchable, preventing confusion and misuse. |
| 2. Metadata Tagging | Technician, Scanner, Coordinate System, Accuracy, Notes | The library becomes a searchable database with full context for quality assurance. |
| 3. Coordinate System | One documented system for all project data | All scans align perfectly in design software, eliminating hours of manual adjustments. |
| 4. Storage Structure | Segregated folders for Raw, Registration, and Production files | Teams can intuitively find the correct data, ensuring they always work with verified files. |

By treating these four pillars as non-negotiable requirements, you build a system that scales with your firm, empowers your team, and delivers consistent results.

Integrating Your Library into BIM and CAD Workflows

A disciplined point clouds library is only strategic when it plugs directly into the software your teams use every day. The real value emerges when survey, architecture, and engineering teams can pull accurate reality data into their workflows without friction.

This means seamless integration with essential tools like Autodesk Revit, Civil 3D, and ReCap. When your library is built on standardized naming, metadata, and coordinate systems, linking or importing point clouds becomes radically simpler. You eliminate the common, frustrating errors that come from using the wrong file or fighting with misaligned coordinates.

Streamlining Data Access for Project Teams

The days of shipping hard drives or waiting hours for massive files to transfer via FTP are over. Modern point cloud management uses cloud platforms to give project teams direct, permission-controlled access to the data they need, right when they need it. This shift is a core component of scalable delivery.

This move is powered by the explosive growth of cloud computing, a market valued at USD 676.29 billion in 2024 and projected to hit USD 2,291.59 billion by 2032. This industry-wide shift toward centralized, accessible data systems not only solves file sharing logistics but also tightens security and version control, ensuring modelers always use the most current, approved dataset.

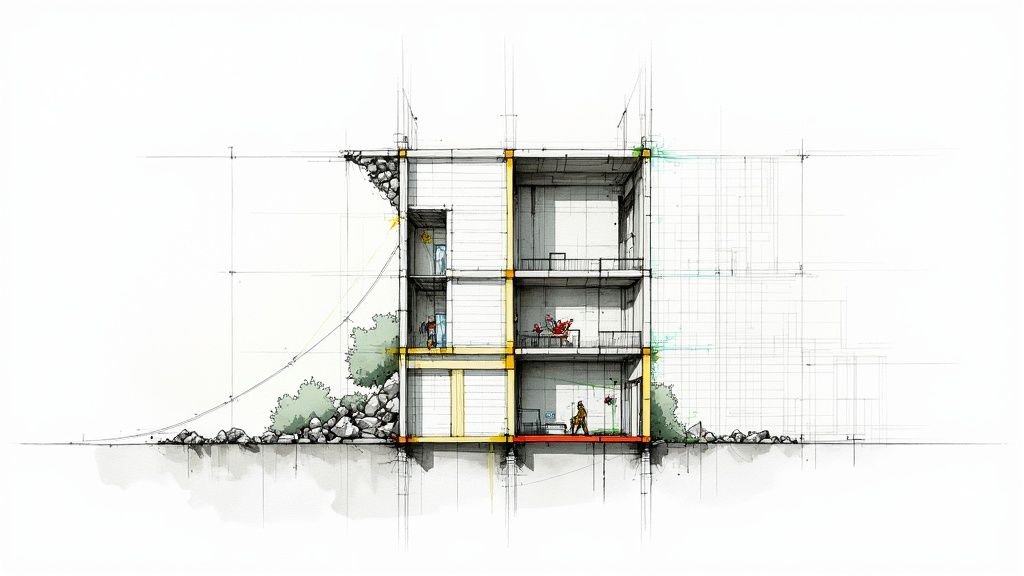

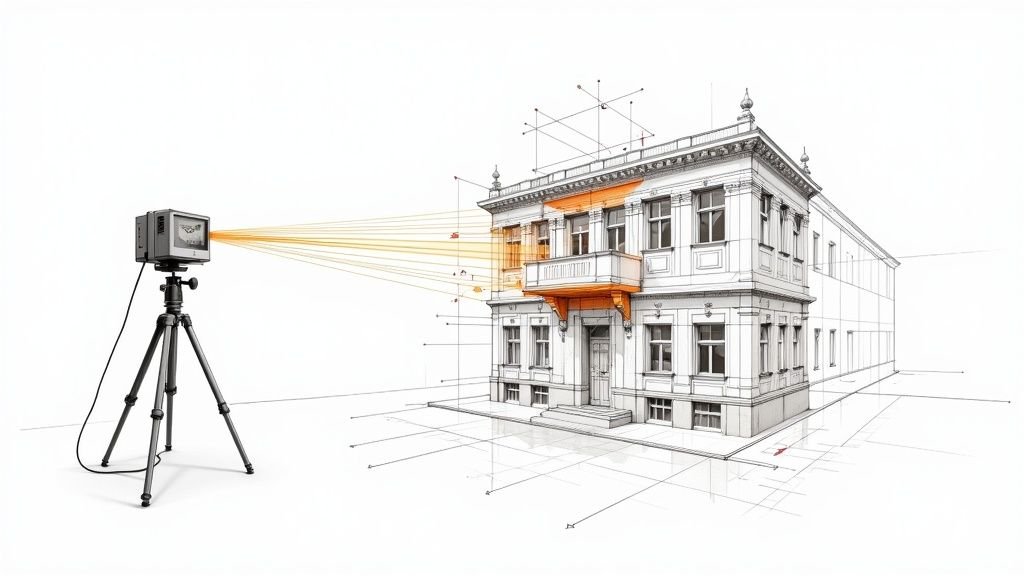

This infographic shows the fundamental components that make a point cloud library a structured, reliable asset.

Each element—from naming conventions to storage structure—plays a vital role in making the data instantly usable within your BIM and CAD environments.

From Raw Scans to a Reliable Modeling Foundation

A well-organized Scan-to-BIM library acts as a reliable bridge between field conditions and the digital model. When a BIM manager or architect needs to begin as-built modeling, they should be able to find and link the correct point cloud in minutes, not hours. The library's structure and metadata provide confidence that the data is accurate, correctly geolocated, and ready for production.

This isn't just about saving a few hours; it's about building predictability into your entire production pipeline. It stops the cycle of rework caused by bad data and lets your highly skilled modelers focus on modeling, not data forensics.

This operational consistency is what protects your margins, especially in complex renovation or adaptive reuse projects where understanding existing conditions is paramount.

For teams looking to refine this process, our guide on how to convert point cloud data to BIM models offers deeper insights into the workflow.

By focusing on clean integration, you transform your point cloud data from a cumbersome project deliverable into a dynamic, accessible asset. This system improves coordination between survey and design teams, providing a trustworthy foundation for all subsequent modeling and BIM documentation. It’s the difference between fighting your data and making it work for you.

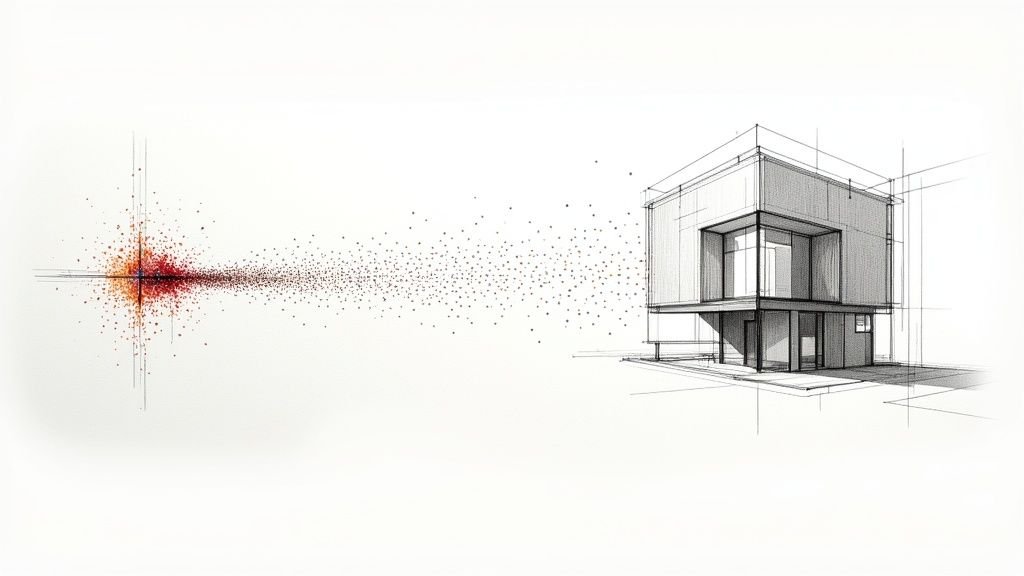

Turning Raw Scans Into Reliable Data

Here’s a hard truth: raw scan data is not library-ready data. A truly reliable point clouds library is built on disciplined processing and uncompromising quality assurance. If you skip this, you’re just archiving future headaches for your team.

This process kicks off the second that data gets back from the field. Every scan must move through a methodical workflow: registration, cleaning, and indexing. This isn't a "nice-to-have" step—it's the bedrock of a trustworthy data asset that will accelerate modeling and prevent expensive mistakes before they happen.

Registration Accuracy Is Non-Negotiable

The first and most critical decision checkpoint is registration. This is where you align multiple scans into one cohesive point cloud. The goal isn’t just to make it "look right"—it’s about hitting a measurable level of accuracy.

Your team absolutely must:

- Achieve Tight Tolerances: Aim for a low Root Mean Square (RMS) error, typically within a few millimeters, depending on project demands.

- Document Everything: The registration report isn't a formality. It’s a crucial piece of documentation that proves the cloud’s accuracy and must be saved as a QA record.

- Verify Control: All scans have to be locked to the project’s established control network and coordinate system. Any deviation here will throw everything off.

There’s no room for guesswork. A methodical approach here ensures the geometric foundation of your as-built model is rock solid.
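If you want to sanity-check a reported figure, RMS error is straightforward to compute from a list of residuals: square each one, average the squares, and take the square root. The sketch below assumes residuals are given in millimeters and that the tolerance comes from your project requirements. Registration packages may define and report their error metrics differently, so treat this as a cross-check rather than a replacement for the software's report.

```python
import math
from typing import Sequence


def rms_error(residuals_mm: Sequence[float]) -> float:
    """Root mean square of the residuals, in the same units as the input."""
    if not residuals_mm:
        raise ValueError("at least one residual is required")
    mean_square = sum(r * r for r in residuals_mm) / len(residuals_mm)
    return math.sqrt(mean_square)


def within_tolerance(residuals_mm: Sequence[float], tolerance_mm: float) -> bool:
    """True when the RMS error does not exceed the project tolerance."""
    return rms_error(residuals_mm) <= tolerance_mm
```

Note that RMS weights large residuals more heavily than a simple average does, so a single badly aligned target can push an otherwise tight registration out of tolerance.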

Cleaning and Classifying for Usability

Once registered, a point cloud is often cluttered with noise from reflective surfaces, temporary construction materials, or people who walked through the scan. A raw, messy cloud is a nightmare for modelers. The whole point of organizing point cloud data is to make it as lightweight and genuinely useful as possible.

A clean, classified point cloud is a sign of a mature production workflow. It shows a commitment to providing modelers with data that is immediately usable, not a digital puzzle they have to solve.

Effective management involves segmenting the data to improve performance and clarity. For example, separating MEP systems from architectural elements allows modelers to turn off what they don’t need. Our guide on how to clean point cloud data for accurate models covers hands-on techniques that are a must-have for any serious Scan-to-BIM team.

Establishing Rigorous QA Checkpoints

Before any dataset is added to your Scan-to-BIM library, it needs to pass a final, rigorous quality check. This is the gatekeeper that stops bad data from contaminating your library. It’s not a quick visual scan; it’s a structured verification process.

Your QA checklist should confirm:

- Coordinate System Adherence: Is the cloud in the correct, documented project coordinate system?

- Accuracy Verification: Does the registration report meet the project's tolerance requirements?

- Data Completeness: Are there any glaring gaps or missing areas in scan coverage?

- Naming and Metadata: Does the file follow your team's naming convention, and is all metadata correctly tagged?

To keep your point cloud library trustworthy, you have to enforce high data quality standards from the start. Knowing how to solve data integrity problems is fundamental to maintaining a system your teams can depend on. By putting these QA gates in place, you ensure every file in your library is a trusted foundation, not a ticking time bomb.
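The checklist above translates naturally into a single pass/fail gate. Here is a minimal sketch, assuming the facts about each dataset have already been gathered by a reviewer or by earlier scripts. The `QaCandidate` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QaCandidate:
    """Facts about one dataset, gathered before it enters the library."""
    coordinate_system: str          # system recorded for this cloud
    project_coordinate_system: str  # the project's documented system
    registration_rms_mm: float      # taken from the registration report
    tolerance_mm: float             # the project's accuracy requirement
    coverage_gaps: int              # gaps noted during coverage review
    name_follows_convention: bool
    metadata_complete: bool


def qa_failures(candidate: QaCandidate) -> List[str]:
    """Return the failed checks; an empty list means the dataset passes."""
    failures = []
    if candidate.coordinate_system != candidate.project_coordinate_system:
        failures.append("coordinate system")
    if candidate.registration_rms_mm > candidate.tolerance_mm:
        failures.append("accuracy")
    if candidate.coverage_gaps > 0:
        failures.append("completeness")
    if not (candidate.name_follows_convention and candidate.metadata_complete):
        failures.append("naming and metadata")
    return failures
```

Returning the list of failures, rather than a bare yes or no, tells the person submitting the data exactly what to fix before trying again.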

Navigating Point Cloud Formats and Software

Building an effective point clouds library requires a smart strategy for your file formats and software. The choices you make here are critical. They determine how well your data integrates with BIM and CAD platforms, how your workstations perform with large datasets, and whether the data is even usable.

This isn't just an IT decision; it's a production decision. The formats and tools you standardize on will directly impact the efficiency of your Scan-to-BIM workflow.

Choosing the Right Point Cloud Format

Different file formats are built for different jobs. The format that’s perfect for archiving raw data might be painfully slow to work with in Revit. Understanding these differences is key to a smooth pipeline from field capture to final model.

Here are the most common formats in AEC projects:

- E57 (.e57): Think of this as the universal adapter for point clouds. It’s a vendor-neutral, ASTM standard format perfect for archival and sharing data between different systems. It neatly packages raw scan data, panoramic images, and critical metadata into one file.

- RCP/RCS (.rcp/.rcs): These are Autodesk's native formats, purpose-built for speed inside ReCap, Revit, and other Autodesk software. An RCS file contains a single scan, while an RCP is a project file that references and combines multiple RCS scans. If your team lives in the Autodesk world, these formats are your go-to for production.

- LAS/LAZ (.las/.laz): The LAS format is the standard for airborne LiDAR but is also common for terrestrial scans. It supports data classification and holds extensive metadata. LAZ is the losslessly compressed version of LAS, well suited to shrinking file sizes for storage.

Standardizing on the right production format—like RCP for a Revit-heavy workflow—is a huge piece of the puzzle. It streamlines the entire process.

A common, field-tested strategy is to archive raw data in the E57 format for future-proofing and then convert it to RCP/RCS for active modeling work.

Core Software for Point Cloud Management

No single piece of software does it all, but a few key tools form the backbone of most point cloud management workflows. A team that knows its process uses these tools together to turn raw scan data into a clean, production-ready asset.

The global market for 3D point cloud processing software hit USD 1.15 billion in 2024, reflecting the industry’s need for specialized tools to handle massive reality capture datasets. You can read more about the growth of 3D data processing in architecture and manufacturing.

Here are the essential platforms:

- Autodesk ReCap: This is usually the first stop for processing raw scan data. It’s excellent at registering, indexing, and cleaning scans from almost any hardware and exporting them into the highly optimized RCP/RCS formats.

- CloudCompare: An open-source powerhouse. CloudCompare is the tool for detailed analysis, running distance calculations, and handling advanced processing tasks. It's a must-have for deep quality control.

A disciplined approach to organizing point cloud data requires a solid grasp of both the formats and the software that bring your library to life. This knowledge gives your team the confidence to tackle complex datasets. To learn about common hurdles, check out our guide on the top challenges in Scan-to-BIM for large point clouds. By setting clear standards from the start, you ensure your library is a source of clarity, not chaos.

Future-Proofing Your Firm with a Centralized Data Asset

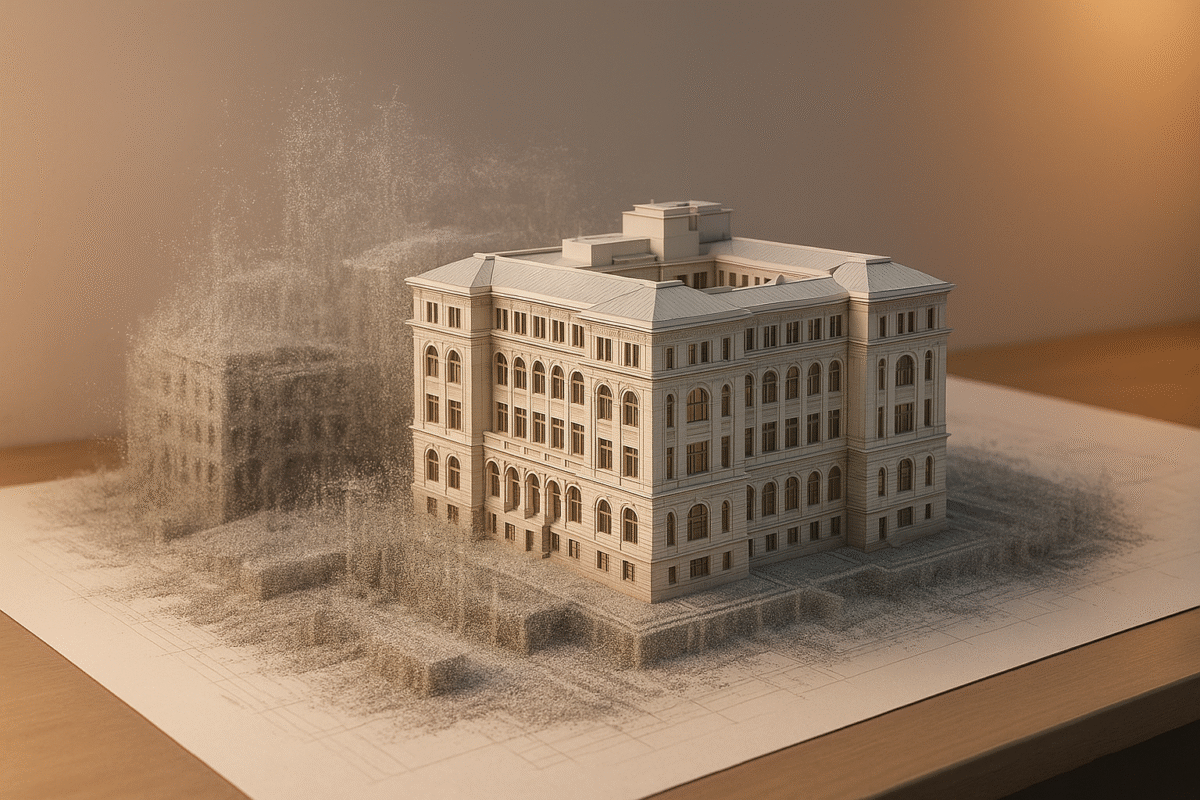

A well-organized point clouds library is more than a fix for today’s workflow bottlenecks—it’s a strategic asset that sets your firm up for future growth. Think of it as evolving from a service provider into a data-driven partner.

When you build a disciplined library, you're not just improving your current Scan-to-BIM workflows. You're creating a proprietary dataset that will drive innovation and protect your margins for years. This shift in mindset treats reality data as a permanent source of truth, opening the door to new services.

From Data Repository to Innovation Engine

The long-term value of your library goes far beyond simple as-built modeling. A structured, clean, and well-documented collection of reality data becomes the perfect foundation for new technologies reshaping the AEC industry.

This is where the real competitive advantage kicks in. A centralized library of reality data is the raw material needed for:

- Training AI Models: Your library becomes the training ground for machine learning algorithms that can automatically recognize pipes, beams, and columns, drastically speeding up modeling.

- Automating QA Processes: By comparing new scan data against the library's historical record, you can automate deviation analysis and quality assurance, flagging construction errors in near real-time.

- Creating Digital Twins: A high-fidelity point cloud is the first layer of a true digital twin, providing the precise geometric backbone for facility management and operational simulations.

Tapping into Machine Vision and Automation

The same principles of good point cloud management are already powering breakthroughs in other industries. Logistics automation, a market valued at $65.25 billion in 2023 and projected to hit $217.26 billion by 2033, relies heavily on point cloud data for robotic navigation and quality inspection. You can dig deeper into how point clouds are fueling 3D perception for automation.

By building a clean, standardized, and searchable point clouds library today, you are creating the proprietary data asset your firm will need to compete tomorrow. It is your firm’s unique, real-world data that cannot be replicated.

This disciplined approach ensures your firm isn't just keeping up with technology but is actively building the infrastructure to lead it. Your library transforms from a simple storage solution for BIM documentation into a strategic engine for innovation.

Your Questions, Answered

Here are clear, actionable answers to questions we often hear from architects, BIM managers, and survey teams trying to build a point cloud library.

Where Do I Start If My Scan Data Is a Mess?

Don't try to organize everything at once. The quickest way to get overwhelmed is by attempting to fix years of chaotic data.

Instead, pick a single pilot project—one that’s either recent or just starting. Use that project to establish your naming convention, folder structure, and a locked-in coordinate system standard. Once you’ve tested the process and know it works, document it and make it the template for all new projects. Progress over perfection is the goal.

Should We Use On-Premise or Cloud Storage?

This comes down to how your team is set up. On-premise storage is great for speed if everyone is in the same office, but it can become a bottleneck for remote collaboration.

Cloud solutions are built for distributed teams, offering secure and scalable access from anywhere. The catch? You need a solid internet connection. For most firms today with multiple offices, field crews, or remote staff, a cloud or hybrid setup offers the flexibility needed for modern point cloud management.

What Is the Most Common Mistake to Avoid?

The single most damaging mistake is failing to enforce one consistent coordinate system within each project. It’s a small oversight that leads to countless wasted hours trying to manually align data in Revit or ReCap, and it introduces a huge risk of error.

Establishing one documented project coordinate system from day one is the most critical step you can take to build a functional Scan-to-BIM library. This discipline ensures data from different scans and sources clicks into place, ready for modeling.

Should We Store Raw Scan Data or Only Processed Files?

You need both. Best practice is to maintain a structured archive for raw and processed data. Think of the raw scan data as your absolute source of truth—it should be archived in a dedicated "Raw" folder and left untouched.

Your processed, cleaned, and registered point clouds (like RCP or E57 files) are the working files your team will use daily. These should live in a separate "Production" folder. This separation means you can always trace back to the original data while your production team has fast access to optimized, ready-to-use files.

We help firms build the systems and processes needed for predictable, scalable delivery. If your team is struggling with data chaos, our Point Cloud Naming Standard Guide can provide the framework to get started.