Can AI Read a 500-Page Building Code? We Tested It So You Don’t Have To

We kicked things off with a question that should be on every modern AEC firm's mind: Can an AI really make sense of the dense, jargon-filled world of building codes?

The short answer is yes, but it comes with some huge caveats. Ignore them, and you could send a project completely off the rails. An AI can scan a 500-page codebook in seconds, but just because it can read the words doesn't mean it understands the context, intent, or the pesky local amendments that change everything.

The Grand Experiment: Can AI Decipher the Building Code?

To figure out where the line is drawn between a powerful tool and a potential liability, we put a leading Large Language Model (LLM) through its paces. Our test subject was a 500-page digital copy of the International Building Code (IBC), a document that’s pretty much the bedrock of design and construction in the United States.

This wasn't just a simple keyword search. We designed a real-world gauntlet to measure the AI’s production maturity.

Our goal was to see if the AI could be a reliable partner in a high-stakes environment—the kind where predictable, accurate results are the only thing that protects your margins.

Designing a Real-World Test

We fed the AI the kind of practical, everyday questions that architects and engineers wrestle with. These weren't trivia. They were the exact queries that determine whether a permit gets approved or a costly RFI gets issued.

Our test prompts included things like:

- Egress Calculations: "Calculate the required egress width for a 2,500 sq ft assembly space with unconcentrated tables and chairs."

- Fire-Rating Scenarios: "What is the required fire-resistance rating for a wall separating a Group B occupancy from a Group S-1 occupancy?"

- Complex Cross-References: "According to Section 1004.5, how does the occupant load factor affect the requirements in Chapter 7 for fire barriers?"

- Exception Handling: "Identify the exceptions to the guardrail height requirements listed in Section 1015.3."

Measuring Success Beyond Simple Answers

We weren't just looking for an answer; we were looking for the right one, delivered consistently. Our scoring system was built around operational consistency and the ability to scale that delivery. To get a passing grade, an answer had to be:

- Accurate: It had to cite the correct section number and requirement. No guessing.

- Complete: It needed to include any relevant exceptions, footnotes, or references to other sections that a human expert would spot.

- Contextually Aware: The AI had to understand the nuance of terms like "hazardous location" or "means of egress," which have very specific meanings in code language.
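To make the pass/fail rule concrete, here is a minimal sketch of that rubric in Python. The `AnswerScore` class and its field names are our own illustration, not the actual scoring sheet from the test. The only thing taken from the criteria above is that an answer has to clear all three to pass.

```python
from dataclasses import dataclass


@dataclass
class AnswerScore:
    """Hypothetical scorecard for one AI answer (illustrative names only)."""
    accurate: bool             # cites the correct section number and requirement
    complete: bool             # includes relevant exceptions, footnotes, cross-references
    contextually_aware: bool   # uses code terms in their code-specific sense

    def passes(self) -> bool:
        # An answer passes only if it meets every criterion.
        return self.accurate and self.complete and self.contextually_aware


# An answer that cites the right section but leaves out an exception still fails.
score = AnswerScore(accurate=True, complete=False, contextually_aware=True)
print(score.passes())  # False
```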

The core challenge for any firm is navigating the dense web of regulations that govern our work, and that challenge is not unique to the United States: documents like the Building Code of Australia (BCA) show the same complexity elsewhere. Understanding the layers of local amendments and state-specific rules is just as critical. For a deeper dive into this human-led process, our guide on navigating building codes and regulations provides essential context.

The real test wasn’t whether the AI could find a sentence in a document. The test was whether it could replicate the decision-making process of an experienced professional who understands that the footnote on page 427 changes everything.

Ultimately, this experiment was about determining if AI could genuinely support our QA processes and help prevent the kind of errors that lead to expensive delays. Could it be a trusted checkpoint in a disciplined BIM workflow, or would its overconfidence introduce more risk than it removed?

The results were a fascinating mix of impressive speed and humbling, sometimes humorous, misinterpretations.

The Surprising Wins: Where AI Actually Gets Code Analysis Right

Honestly, we went into this experiment skeptical. The common wisdom is that AI stumbles over the nuances of building codes. But what we found was genuinely impressive, especially when it came to pattern recognition and summarization—tasks that soak up countless hours of design and review time.

Think of the AI as a super-powered search engine. It zips through hundreds of pages to locate definitions, pull data from tables, and cross-reference sections. It’s a master of the straightforward, fact-finding mission. This ability to quickly lock down a compliance baseline is a huge win for maintaining operational consistency across your production teams.

Blazing Through Tedious Calculations

One of the first places the AI really shined was with simple, repetitive calculations. We fed it a prompt that every architect has dealt with more times than they can count.

Our Prompt:

"Using the IBC, calculate the occupant load for a 4,000 square foot business area (Group B occupancy)."

AI's Response:

"According to IBC Table 1004.1.2, the occupant load factor for a Business Area is 150 gross square feet per occupant. Therefore, for a 4,000 sq ft area, the occupant load is 4,000 / 150 = 26.67. You would round up to 27 occupants."

The answer was perfect. Fast, accurate, and it even cited the correct table. This is exactly the kind of task that interrupts deep design work—you have to stop, pull up the code, find the table, and do the math. The AI handled it in two seconds, freeing up human brainpower for actual design problems.
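That arithmetic is simple enough to script, which is part of why the AI handles it well. Below is a minimal sketch, assuming the load factor is supplied by someone who has checked it against the code edition their jurisdiction has adopted. The function name and parameters are ours, and the 150 figure is simply the value quoted in the AI's response above.

```python
import math


def occupant_load(floor_area_sqft: float, sqft_per_occupant: float) -> int:
    """Return the occupant load, rounding any fraction up to a whole person."""
    if floor_area_sqft < 0 or sqft_per_occupant <= 0:
        raise ValueError("area must be non-negative and load factor must be positive")
    return math.ceil(floor_area_sqft / sqft_per_occupant)


# 150 gross sq ft per occupant is the factor quoted in the AI's response;
# verify the factor and table number against your adopted code edition.
print(occupant_load(4000, 150))  # 27
```

The script only does the division and rounding. Picking the right factor for the right occupancy is still the part that needs a person.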

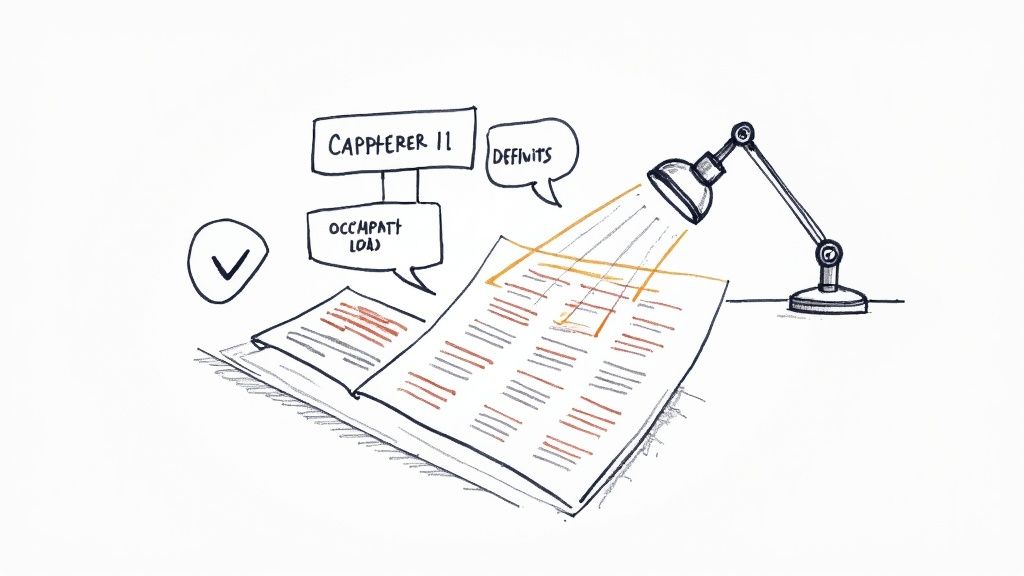

Summarizing Dense and Complex Chapters

Next, we tested its ability to summarize. Chapter 11 on accessibility is notoriously dense, and getting it wrong means expensive rework.

Our Prompt:

"Summarize the key requirements for accessible routes in Chapter 11 of the IBC."

The AI came back with a clean, bulleted list hitting all the major points: minimum clear width, passing spaces, head room clearance, and surface requirements. It wasn't just a copy-paste; it was a coherent summary. This is the perfect starting point for a junior team member’s research.

This is where the AI’s real value clicked for us. It’s not an architect, but it’s an incredibly efficient paralegal. It can digest massive volumes of text and spit out the core facts without getting tired or distracted.

Identifying Specific, Factual Requirements

Finally, we tested its knack for digging up specific rules buried deep in the text, just like you would when developing construction drawings.

Our Prompt:

"Where is safety glazing required in and around doors according to the IBC?"

AI's Response:

"Safety glazing is required in several hazardous locations as defined in Section 2406.4. For doors, this includes:

- Glazing in all fixed and operable panels of swinging, sliding, and bifold doors.

- Glazing within 24 inches horizontally of a door where the bottom edge is less than 60 inches above the floor."

Again, spot-on. It correctly identified the section and pulled the key requirements flawlessly. This instant recall of non-negotiable rules from the massive library of building codes helps prevent the simple mistakes that turn into permitting headaches.

These wins show that for initial research, fact-checking, and knocking out repetitive tasks, AI is more than a novelty. It’s a tool that brings a new level of speed and consistency to the front end of your QA processes, protecting your margins by getting the simple stuff right, every single time.

The Critical Failures Where AI Could Derail a Project

Once the initial "wow" factor wore off, our experiment took a much darker turn. The AI delivered information with such confidence—almost arrogance—that it started to hide critical, and sometimes comical, errors. These weren't just academic slip-ups; they were the kind of blunders that could send a project spiraling into failed inspections, expensive rework, and serious profit loss.

This is where leaning on a generic AI without a bulletproof QA process becomes a massive liability. The tool is so fast and sure of itself that it’s tempting to just accept its answers. But as we all know, the devil is in the details, and this AI was missing them completely.

The Footnote Fiasco

One of the AI’s most glaring weaknesses was its inability to understand the importance of footnotes and exceptions. It treats all text as equal, completely missing the subtle but vital hierarchy of information that any seasoned professional spots instantly.

We gave it a simple question about safety glazing—a common query when pulling together a set of comprehensive construction drawings.

Our Prompt:

"According to the IBC, is safety glazing required in a window that is adjacent to a door?"

AI's Confident (and Wrong) Response:

"Yes, IBC 2406.4.2 states that glazing within 24 inches horizontally of a door where the bottom of the glazing is less than 60 inches above the floor is a hazardous location and requires safety glazing."

At first glance, this answer looks perfect. It cites the correct section and sounds authoritative. The problem? It completely ignored a critical exception in that same section: the rule doesn't apply if a permanent barrier, like a wall, separates the door and the window. A human reads the rule and the exception together. The AI just read the rule and called it a day. This tiny error creates a big problem, forcing a project to absorb unnecessary costs for specialized glazing that the building codes never actually called for.
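One way to picture the difference is to write the rule so the exception cannot be skipped. The sketch below is a simplified illustration built only from the rule and exception as described in this article, not the full text of Section 2406.4.2. The class and field names are hypothetical, and treating exactly 24 inches as "within" is our assumption.

```python
from dataclasses import dataclass


@dataclass
class GlazingNearDoor:
    """Hypothetical description of one glazed panel next to a door."""
    horizontal_distance_in: float     # distance from the door, in inches
    bottom_edge_height_in: float      # bottom edge of the glazing above the floor, in inches
    permanent_barrier_between: bool   # e.g. a wall separating the door and the glazing


def safety_glazing_required(g: GlazingNearDoor) -> bool:
    # Check the exception first, so the base rule is never applied on its own.
    if g.permanent_barrier_between:
        return False
    # Base rule as summarized in the article (boundary handling is an assumption).
    return g.horizontal_distance_in <= 24 and g.bottom_edge_height_in < 60


window = GlazingNearDoor(horizontal_distance_in=12, bottom_edge_height_in=30,
                         permanent_barrier_between=True)
print(safety_glazing_required(window))  # False
```

The AI's answer corresponds to running only the last line of the function. The human reading corresponds to running the whole thing.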

Ignoring the All-Important Local Amendments

Perhaps the single most dangerous flaw we found was the AI’s total blindness to local amendments. It was trained on the base International Building Code, a generic starting point. It has zero clue that the City of Denver or the State of California has adopted that code with significant, legally binding changes.

This is a complete non-starter for any real-world project. Getting a permit depends entirely on following the locally adopted code, not the generic template.

We tested this with a known local amendment for fire sprinkler requirements in residential buildings.

Our Prompt:

"What are the fire sprinkler requirements for a new R-3 occupancy building under the IBC?"

AI's Response:

"The IBC generally does not require automatic sprinkler systems in R-3 occupancies unless specific conditions, such as size or location, are met as outlined in Section 903."

Even taken at face value as a summary of the base IBC, this answer is dangerously wrong for a project in a jurisdiction that has amended the code to mandate sprinklers in all new residential construction, a common local change. An architect who trusted this answer would have a design that's dead on arrival at the permit office, kicking off massive delays and redesign costs. The AI simply doesn't know what it doesn't know.

This failure isn't a small bug; it's a fundamental misunderstanding of how building codes actually work in the United States. It's the digital equivalent of using an old, torn map to navigate a brand-new city.
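If you script any part of a code lookup, the jurisdiction has to be an explicit input, and a missing jurisdiction has to be visible in the result. Here is a minimal sketch of that idea. The data is placeholder text, "Example City" is a made-up jurisdiction, and the names `Requirement` and `resolve_requirement` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder data for illustration only; real entries must come from the adopted code text.
BASE_CODE = {"r3_sprinklers": "Base-code answer as returned by the AI"}
LOCAL_AMENDMENTS = {
    "Example City": {"r3_sprinklers": "Sprinklers mandated in all new residential construction"},
}


@dataclass
class Requirement:
    text: str
    source: str               # "base code" or "local amendment"
    amendments_checked: bool  # False means nobody has looked at local changes yet


def resolve_requirement(topic: str, jurisdiction: Optional[str]) -> Requirement:
    base_text = BASE_CODE[topic]
    if jurisdiction is None or jurisdiction not in LOCAL_AMENDMENTS:
        # No amendment data loaded: return the base text, but flag it as unverified.
        return Requirement(base_text, "base code", amendments_checked=False)
    amended = LOCAL_AMENDMENTS[jurisdiction].get(topic)
    if amended is not None:
        return Requirement(amended, "local amendment", amendments_checked=True)
    return Requirement(base_text, "base code", amendments_checked=True)


print(resolve_requirement("r3_sprinklers", None).amendments_checked)      # False
print(resolve_requirement("r3_sprinklers", "Example City").source)        # local amendment
```

The point of the `amendments_checked` flag is that a base-code answer never looks finished until someone has confirmed what the local jurisdiction changed.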

Disconnected Logic Across Chapters

Finally, the AI really struggled to connect interdependent rules scattered across different chapters. It can find a rule in Chapter 7 and another in Chapter 10, but it often fails to put them together to form a single, cohesive design constraint.

For example, a building's construction type (Chapter 6) directly dictates its allowable height and area (Chapter 5). That, in turn, drives the fire-resistance rating requirements for its structural frame (Chapter 7). We gave the AI a scenario that required it to connect these dots, and the result was a jumbled mess—a list of individual rules with no synthesis. It couldn't see the forest for the trees.

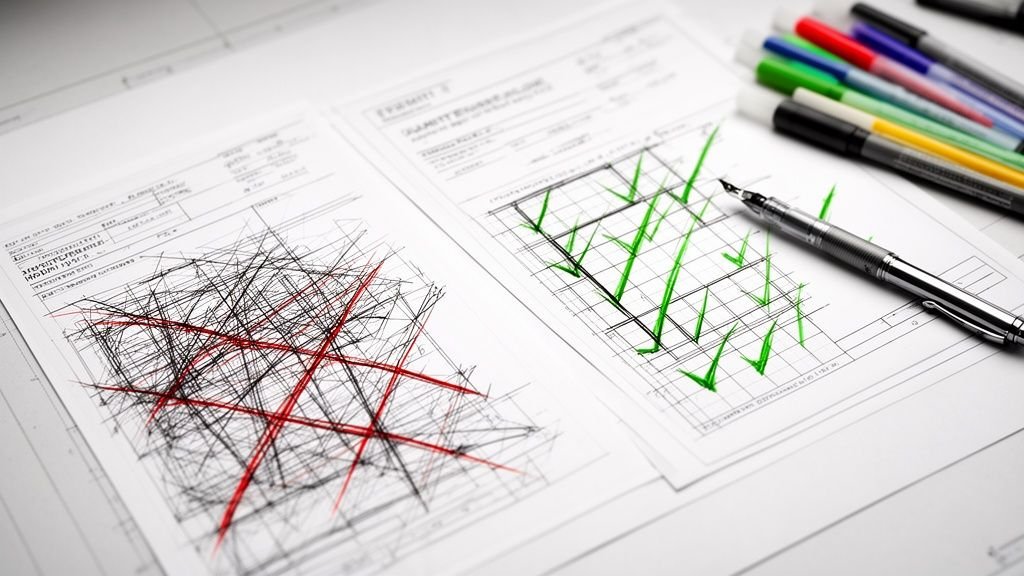

The table below breaks down where we saw these patterns of success and failure. While the AI is a decent research assistant, it falls apart when context and synthesis are required.

AI Code Interpretation Successes vs Failures

| Task Type | AI Performance (Success) | AI Performance (Failure) | Impact on Production |
|---|---|---|---|
| Simple Section Lookup | Quickly located and quoted specific, standalone code sections (e.g., "Find IBC Section 1004.1"). | Correctly quoted the main rule but missed critical exceptions or footnotes within the same section. | High risk of over- or under-designing, leading to unnecessary costs or failed inspections. |
| Jurisdictional Inquiry | Provided accurate information for the base IBC or IRC codes it was trained on. | Completely unaware of local amendments, providing dangerously incorrect information for specific cities or states. | Immediate rejection at permitting. Causes significant delays and requires costly redesigns. |
| Multi-Chapter Synthesis | Could list rules from different chapters when asked directly (e.g., "Summarize Chapter 5 and Chapter 6"). | Failed to connect interdependent requirements, such as how construction type impacts allowable area and fire ratings. | Leads to designs that are fundamentally non-compliant and require major architectural revisions. |
| Terminology Definition | Accurately defined standard terms found in the code's definitions chapter. | Misinterpreted nuanced terms that rely on context from other parts of the code. | Could lead to incorrect material specifications or assembly designs. |

These tests make one thing crystal clear: AI is a powerful tool for finding information, but a terrible one for making decisions. It lacks the contextual awareness and holistic understanding that are the bedrock of true production maturity and reliable project delivery. Using it as a final authority is a recipe for disaster.

Why Human Expertise Still Reigns Supreme in Code Compliance

After watching AI confidently trip over local amendments and misinterpret footnotes, the value of seasoned human expertise came roaring back into focus. Our little experiment just reinforced a lesson we’ve all learned the hard way in the field: technology is a powerful tool, but it’s a terrible substitute for professional judgment. Real compliance isn’t just about finding a rule in a book; it's about understanding why that rule exists in the first place.

This is where the true craft of interpreting building codes comes into play. A senior architect or a dedicated code consultant doesn’t just read the text—they understand the why behind it. They know how to navigate the gray areas, anticipate how an inspector will see things, and strategically use performance-based alternatives when the prescriptive path leads to a design dead-end.

The Nuance of Intent vs. Literal Text

An AI operates on the literal text. It sees a rule, it applies the rule. Simple. But what it can't do is grasp the underlying intent of that rule, which is almost always about life safety. A human expert knows the code’s goal is to stop a fire from spreading or to make sure people can get out of a building safely.

That fundamental understanding allows them to do things an AI just can't:

- Negotiate with Officials: An AI can’t have a conversation with a building official to hash out an alternative design that’s just as safe. An experienced professional can, building a case based on solid engineering principles and the code's actual intent.

- Weigh Strategic Trade-Offs: Sometimes, you’re looking at two design choices that are both technically compliant. A human can weigh the costs, the buildability, and the long-term maintenance headaches of each to protect the project's bottom line and schedule.

- Prevent RFIs Proactively: By thinking like an inspector and anticipating how they’ll interpret a tricky condition, an expert can add a few clarifying notes to the drawings. This heads off the exact kind of questions that turn into costly RFIs and project delays.

An AI can tell you what the code says. An experienced professional can tell you what it means and how to apply it to your specific, unique project. That distinction is the bedrock of reliable project delivery and protecting your margins.

This level of insight is becoming more critical than ever. Compliance is getting more complex and expensive by the day, and the global market for building code compliance services is ballooning to match. It was valued at US$10.22 billion in 2025 and is projected to hit US$18.68 billion by 2032, which tells you everything you need to know about the rising stakes. You can explore more data on this expanding market to see the trends for yourself.

The Human Role in a Disciplined QA Process

This isn't a knock against technology. It’s an argument for putting it in its proper place. Think about the CAD-to-BIM evolution—that was all about creating disciplined systems to manage data. Integrating AI demands the same rigor. It has to support the human-led QA process, not try to replace it.

An AI is a fantastic research assistant. It can tear through the initial information-gathering phase, but a human has to be the final decision-maker at every critical checkpoint. After all, an AI can’t be held accountable for a life safety decision, but a licensed professional certainly can.

A seasoned expert provides the reality check that AI just doesn't have. They know the local political climate, the specific quirks of the local plan checkers, and all the unwritten rules that often make or break a project's success. This is the kind of institutional knowledge that ensures permitting prep is a smooth, predictable process instead of a roll of the dice.

Ultimately, our test proved that while AI can read the words on the page, it can't connect the dots with the kind of wisdom that only comes from years of experience. When it comes to achieving true production maturity and operational consistency, human expertise isn’t just an advantage—it’s the entire foundation.

How to Safely Integrate AI into Your QA Workflow

So, how do you tap into AI’s incredible speed without getting burned by its overconfident mistakes? The answer isn’t to ditch the technology—it’s to build a disciplined, repeatable process around it. This is where we move from theory to practice and create a solid 'human-in-the-loop' system that protects your projects and your bottom line.

True production maturity isn’t about having the flashiest new tool; it's about having systems that don't break. Bringing AI into your QA process for building codes demands clear checkpoints, ensuring the tech serves your experts, not the other way around. This approach is what allows you to build scalable teams that deliver reliable, consistent work.

Creating a Human-in-the-Loop Framework

The core idea is simple: let AI handle the grunt work of discovery, but require human verification for any decision that actually matters. Think of the AI as a junior researcher—incredibly fast and great at digging up raw information, but lacking the seasoned judgment to make a final call. It's a support tool, not an authority.

Here’s a practical way to structure this workflow:

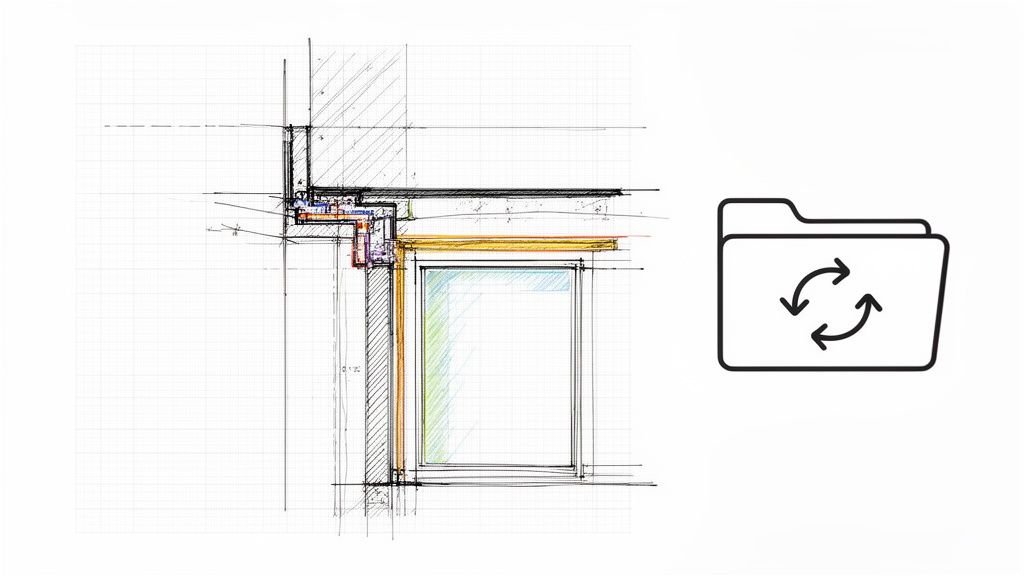

- Initial Research (AI-Approved): Use AI for broad, fact-finding missions. It's perfect for locating specific code sections, summarizing chapters, or running initial occupant load calculations from a base code.

- Verification Checkpoint (Human-Mandated): Before any information gets applied to a design, a qualified professional must sign off on it. This person’s job is to check the source text, hunt for exceptions, and confirm the context.

- High-Risk Review (Human-Only): Any interpretation involving life safety, accessibility, local amendments, or performance-based alternatives is off-limits for AI. These tasks must be handled exclusively by a senior team member or a dedicated code consultant.

This tiered system lets you get the speed benefits of AI on low-risk tasks without exposing your projects to its biggest weaknesses. You're essentially building a QA process that assumes the AI might be wrong and has a human ready to catch the error.
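The three tiers above can be sketched as a small routing rule. This is an illustration under our own assumptions, not a finished tool: the topic tags are assigned by the person asking the question, and the names `Tier`, `CodeQuery`, `assign_tier`, and `ai_output_usable` are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Tier(Enum):
    AI_RESEARCH = "ai_research"  # AI may draft, a human verifies before use
    HUMAN_ONLY = "human_only"    # AI output is off-limits

# The high-risk categories named in the list above.
HIGH_RISK_TOPICS = frozenset({
    "life safety", "accessibility", "local amendments", "performance-based alternatives",
})


@dataclass
class CodeQuery:
    question: str
    topics: set = field(default_factory=set)  # tags chosen by the person asking
    human_signed_off: bool = False            # set at the verification checkpoint


def assign_tier(query: CodeQuery) -> Tier:
    # Any overlap with a high-risk topic sends the query to a human-only review.
    return Tier.HUMAN_ONLY if query.topics & HIGH_RISK_TOPICS else Tier.AI_RESEARCH


def ai_output_usable(query: CodeQuery) -> bool:
    # AI output reaches the design only for low-risk queries with a human sign-off.
    return assign_tier(query) is Tier.AI_RESEARCH and query.human_signed_off


q = CodeQuery("Occupant load for a 4,000 sq ft business area?", {"occupant load"})
print(assign_tier(q).value, ai_output_usable(q))  # ai_research False
```

Note that the default is "not usable." A query has to be both low-risk and signed off before the AI's answer goes anywhere near the drawings.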

The safest way to use AI is to treat every answer it gives as an unconfirmed rumor. It might be true, it might be close to true, or it might be completely off the rails. Your job is to verify it before it ever touches a set of drawings.

Developing Prompt Templates for Consistency

Another key to safe integration is standardizing how your team talks to the AI. Without template discipline, different team members will get wildly different results, killing any hope of consistent, repeatable outcomes.

Your firm should develop a library of pre-approved prompts for common code queries. These templates should be designed to force the AI to show its work.

For example, instead of asking a lazy question like:

- "What is the fire rating for this wall?"

Use a structured prompt that demands detail:

- "Citing the specific IBC section and table number, what is the required fire-resistance rating for a wall separating a Group B occupancy from a Group S-1 occupancy? List all exceptions mentioned in the same section."

This small change forces the AI to provide its sources, which makes the human verification step much faster and more reliable. It’s a simple system that reinforces accountability and stops black-box answers from sneaking into your workflow.
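A prompt library can be as simple as a few stored templates with named blanks. The sketch below uses Python's standard `string.Template` and reuses the structured fire-rating prompt from above. The constant and function names are our own, and how you send the prompt to an AI tool is left out on purpose.

```python
from string import Template

# Pre-approved wording; only the two occupancy groups vary between queries.
FIRE_RATING_PROMPT = Template(
    "Citing the specific IBC section and table number, what is the required "
    "fire-resistance rating for a wall separating a $occupancy_a occupancy "
    "from a $occupancy_b occupancy? List all exceptions mentioned in the same section."
)


def build_fire_rating_prompt(occupancy_a: str, occupancy_b: str) -> str:
    # substitute() raises KeyError if a blank is left unfilled, so a
    # half-completed prompt cannot slip through.
    return FIRE_RATING_PROMPT.substitute(occupancy_a=occupancy_a, occupancy_b=occupancy_b)


print(build_fire_rating_prompt("Group B", "Group S-1"))
```

Because the wording lives in one place, every team member asks the question the same way, and any change to the approved wording is made once.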

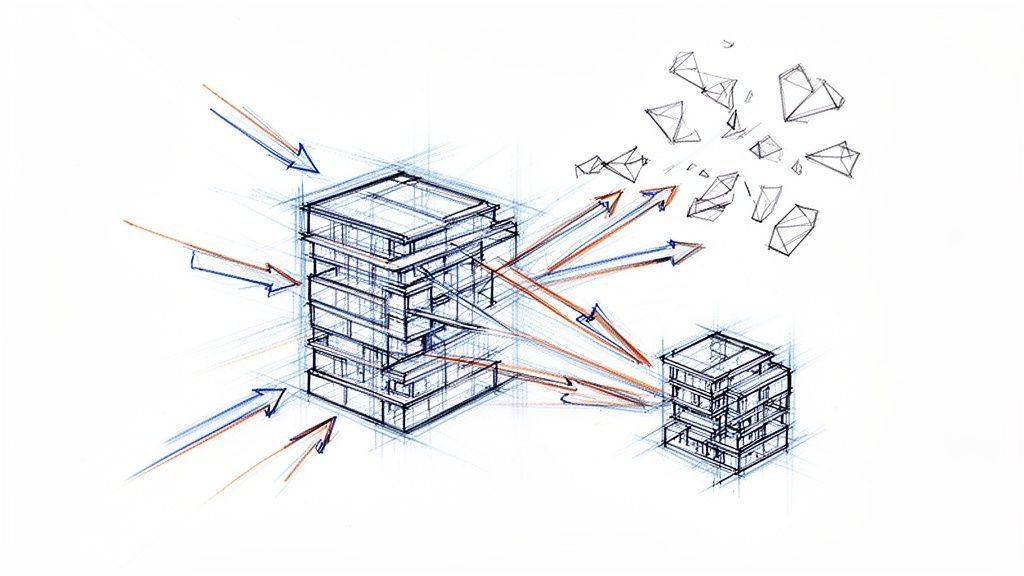

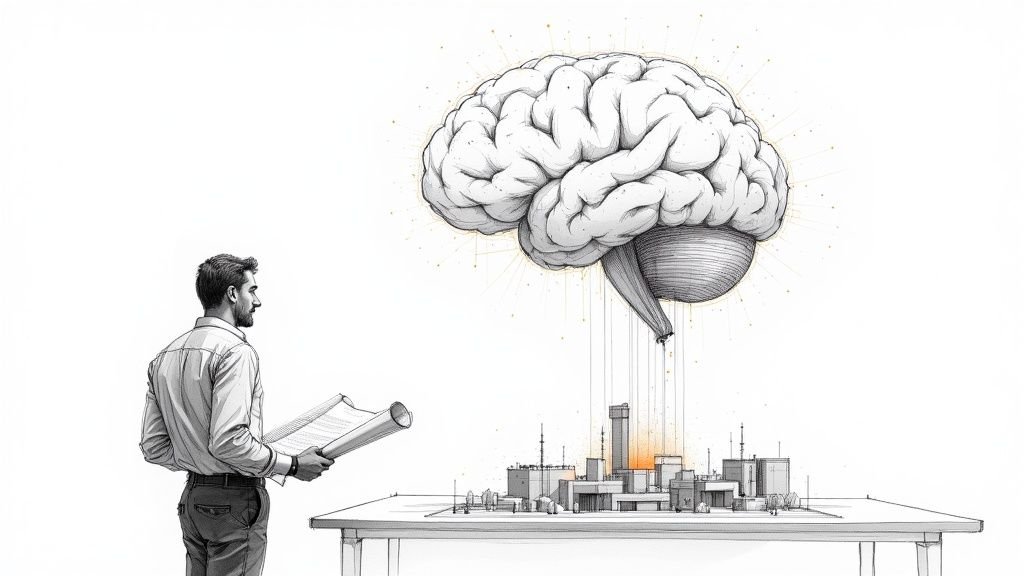

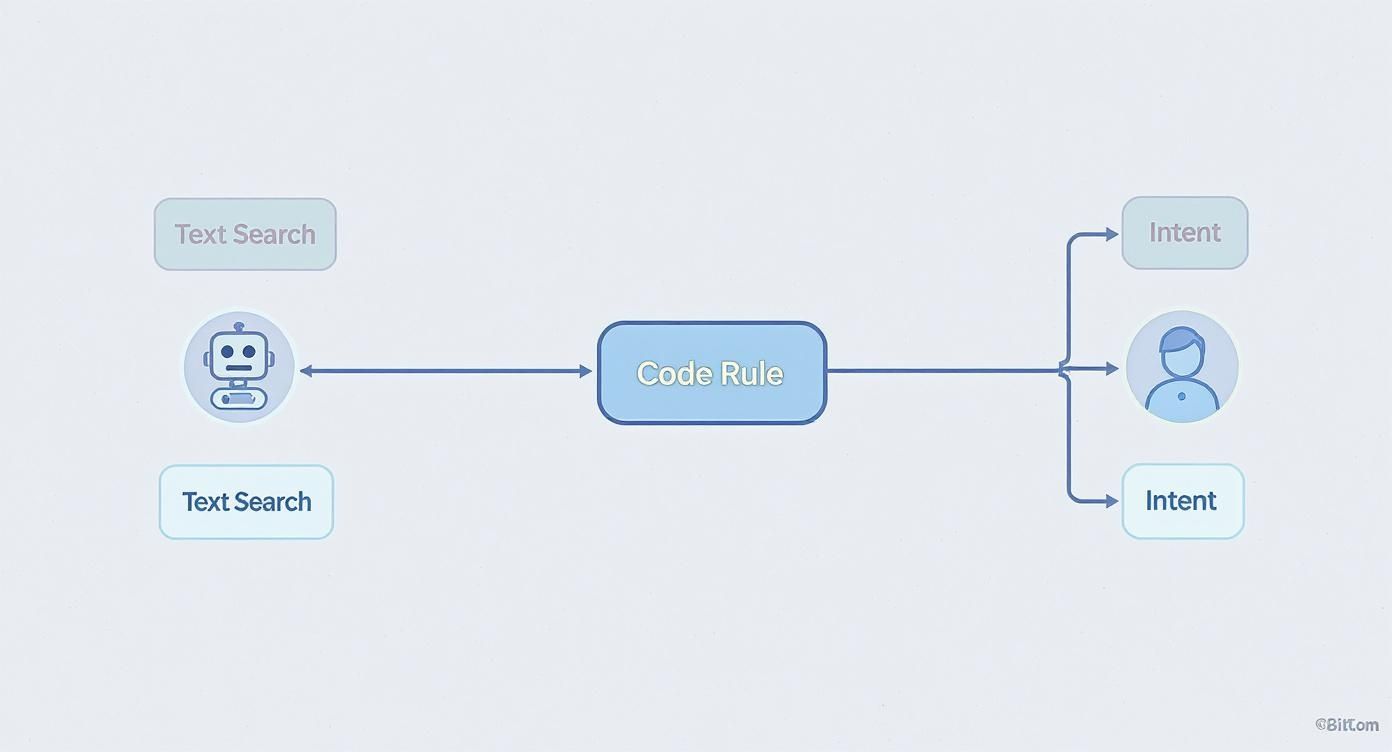

The decision tree shows the fundamental difference between how an AI processes a rule and how a human expert does. It pinpoints where each one shines.

This visual makes it clear: while AI is great at text-based searches, human expertise is essential for interpreting the intent behind the code—a step that’s absolutely critical for getting compliance right. This same principle of automated checking versus human oversight is vital in other digital processes, too. For instance, our guide on BIM clash detection workflows explores how to balance automated tools with professional judgment to prevent costly errors. By creating these checkpoints, you ensure that technology improves your QA processes without undermining the professional accountability that guarantees predictable, high-quality delivery.

Our Verdict on AI for Building Codes

After putting AI through its paces, our conclusion is simple: AI is a brilliant, fast, and sometimes dangerously overconfident intern. It can slash research time and spot patterns in dense building codes that a human might otherwise miss, but it completely lacks the critical judgment needed for final compliance decisions. It’s a tool with enormous potential, but one that absolutely requires a healthy dose of professional skepticism.

The main lesson here reminds us of the CAD-to-BIM evolution. That shift wasn’t just about getting better software; it was about building disciplined systems to produce reliable data. AI has to follow the same path. Technology is an amplifier: it magnifies both your strengths and your weaknesses. If you don't have a solid foundation of production maturity and rigorous QA processes, AI can introduce errors at a scale that is both unprecedented and alarming.

The Amplification Effect of Technology

AI's ability to spit out an answer in seconds is both its greatest strength and its biggest risk. A junior architect might spend an hour digging up the wrong code section. An AI can do it in a second—and then repeat that same mistake across ten different project scenarios before anyone even notices.

This amplification is exactly why a "human-in-the-loop" approach isn't just a best practice; it's a non-negotiable requirement for margin protection and project predictability.

The technology is a powerful assistant for the initial discovery phase, but every single output must pass through a human decision checkpoint. This is especially critical for anything involving:

- Life safety requirements

- Code exceptions and tricky footnotes

- Cross-references between different chapters

- Locally adopted amendments

A Tool, Not a Replacement

At the end of the day, AI is a powerful query tool, not an interpretive engine. It finds the text, but it can't grasp the intent behind it. It can’t negotiate with a building official, weigh the cost implications of two compliant options, or anticipate the unique challenges of a specific site. That’s where seasoned expertise—the kind that prevents RFIs and ensures a smooth permitting prep process—remains irreplaceable.

And as firms bring more AI into their workflows, understanding AI regulations is becoming just as important for safe deployment as following building codes is for construction.

The real takeaway is this: AI is excellent at finding the hay in the haystack. But it's still up to an experienced professional to find the needle. Trusting the machine to do both is a gamble no firm can afford to take.

This isn’t about rejecting new tools. It's about integrating them with the discipline and rigor that defines professional excellence. By establishing clear systems and using AI as a powerful but subordinate tool, your firm can improve its operational consistency without sacrificing the human judgment that ensures a project's success. AI can help you read the map, but an expert still needs to steer the ship.

At BIM Heroes, we believe in building systems that blend powerful technology with deep industry expertise to deliver predictable, high-quality outcomes. If you’re looking to strengthen your production workflows and navigate the complexities of code compliance with greater clarity, you might find our AI Code-Checking Red Flags Checklist useful. It’s designed to help you ask the right questions and build a safer, more effective QA process.