What BIM Managers Fear Most About AI (And Why They’re Right)

For every BIM manager feeling the heat, the constant buzz about AI probably sounds less like a solution and more like a problem. The hype is all about speed and automation, but from where you stand, you see the potential for total chaos. You see a direct threat to the very systems you’ve spent years building to guarantee predictability and protect project margins.

Your hesitation isn’t about resisting technology. It's a pragmatic defense of your firm's hard-won production maturity.

The Real Anxiety AI Brings to the BIM Manager

Most of the industry talk around AI completely misses the day-to-day stress it creates for the person in charge of data integrity. While the C-suite sees dollar signs and efficiency gains, you see the very real risk of losing control over the meticulous BIM workflows you’ve curated. It’s that quiet dread that AI-generated content will poison your project ecosystem, turning a well-managed model into a massive liability.

This isn't some abstract worry. It’s a grounded fear that comes from years of enforcing discipline and consistency. You worked tirelessly to drag your firm from the wild west of CAD to the structured world of BIM, and now AI feels like a black box capable of undoing all that progress in a single afternoon.

From Control to Chaos: The Core Fears

This anxiety comes from specific, tangible risks that directly threaten your core duties. These aren't just hypotheticals; they're the logical next step when you introduce ungoverned automation into a complex production environment.

Here's what's likely keeping you up at night:

- Unverified Geometry: Sure, AI tools can pump out thousands of elements in minutes, but who is checking if any of it is accurate or even buildable?

- Mis-Tagged Families: Automated placement could easily grab the wrong family or slap incorrect data onto an element, corrupting your schedules and blowing up the cost estimate.

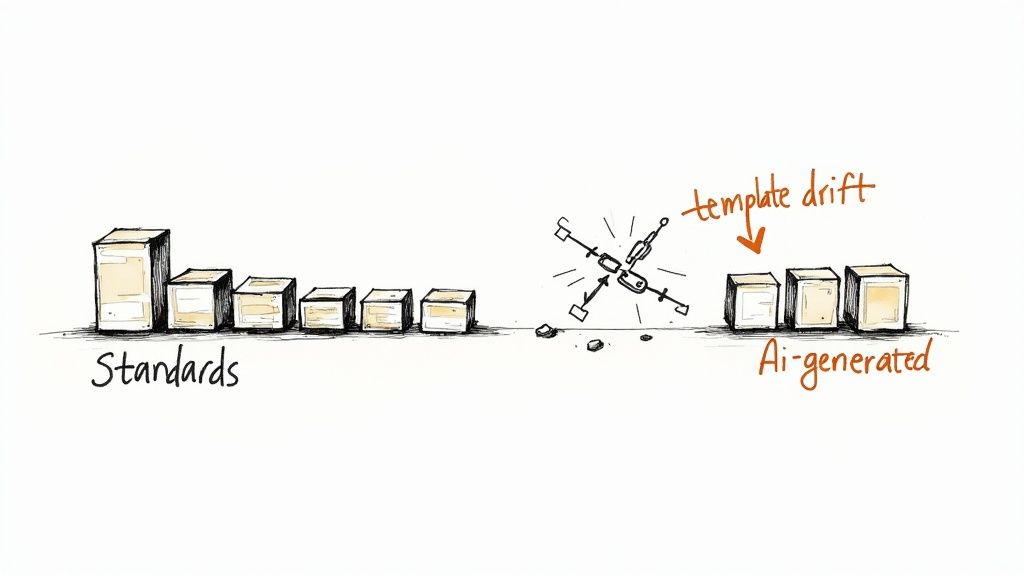

- Inconsistent Content & Template Drift: An AI trained on messy public datasets might start injecting content that completely ignores your company's standards and templates, eroding quality.

- Massive QA Overload: Instead of making your job easier, AI could bury you. You could end up spending all your time quality-checking an endless flood of machine-generated data, which makes preventing RFIs far more expensive than it is today.

"Your hesitation to embrace AI isn't a failure of vision. It’s the responsible act of a manager who understands that in production, consistency isn't just a preference—it's the foundation of profitability and predictability."

The pressure is immense. You're the guardian of the "single source of truth," but now AI wants to introduce a thousand unverified "suggestions." The whole point of moving from CAD to BIM was to establish rules. Now, you’re being told to trust an algorithm that might not understand—or care about—any of them. The rise of AI in BIM marks a critical turning point, and it demands a whole new layer of governance.

This article is here to tell you that your concerns are valid. We’re going to dissect these fears, show you why they’re justified, and—more importantly—give you a practical path forward. The answer isn't to block AI, but to manage it with the same discipline you apply to everything else in your BIM environment.

It’s about building a framework that protects your projects, your team, and your sanity. We don't sell hours; we sell clarity, systems, and reliable delivery.

Fear 1: Losing Control Over BIM Standards

As a BIM Manager, you’re the guardian of order. Your job is to create systems, build templates, and establish workflows that transform the natural chaos of design into a predictable, profitable production line. So, the fear that AI might unravel all that hard work isn't just valid—it’s a survival instinct honed over years of protecting project integrity.

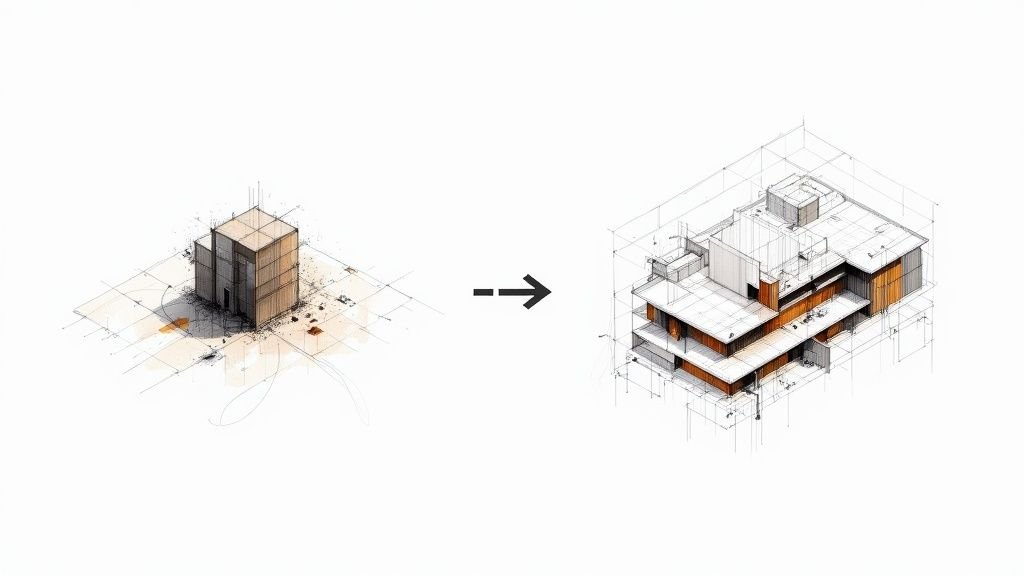

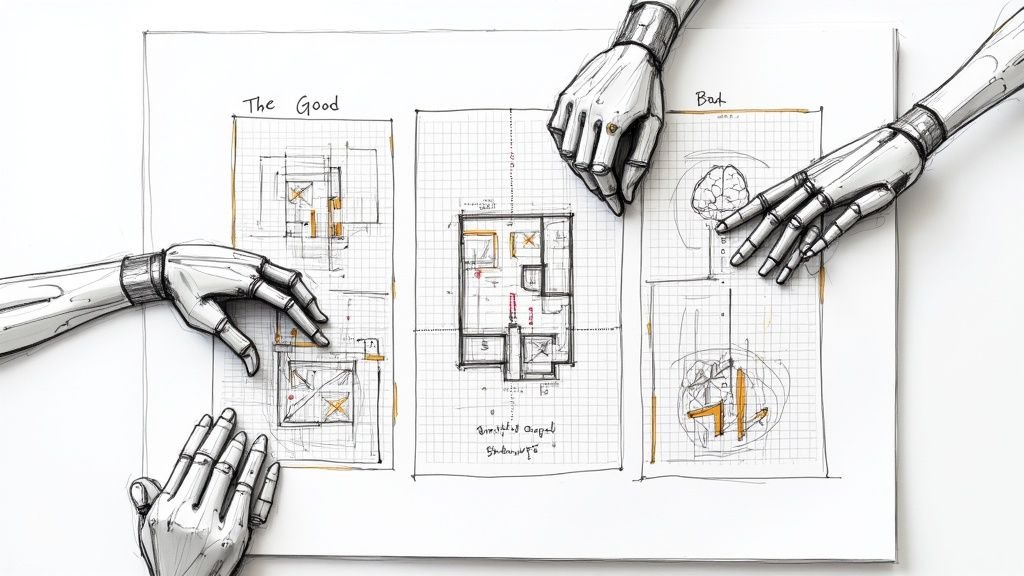

This fear really boils down to one corrosive concept: template drift. It’s the slow, almost invisible erosion of your firm's established standards. An AI tool, trained on the wild west of internet models, might spit out a wall assembly that looks right but is tagged all wrong, uses a non-standard family, or contains geometry that completely violates your modeling protocols.

Don't mistake this for a simple housekeeping issue. It's a direct threat to your entire operation's consistency. Every rogue family and mis-tagged element corrupts your data, putting everything from cost estimates to permitting prep at risk.

The Uncontrolled Supply Chain Analogy

Think about your BIM environment like a high-tech manufacturing facility. You have a list of vetted suppliers (your content libraries) and a strict QA process for every single component that hits the assembly line (your model). This guarantees every part fits, functions, and carries the right data.

Now, imagine an AI tool acting like an unauthorized procurement agent. It starts pulling in parts from unverified, third-party suppliers just because they look close enough.

One unchecked, AI-generated component is like a counterfeit part in a high-performance engine. It might look the same, but under pressure, it fails—and takes the entire system down with it, destroying predictability and eroding margins.

This is exactly what ungoverned AI can do to your digital ecosystem. It injects "counterfeit" digital assets into your projects, poisoning the model from the inside out. A single non-compliant door family could throw off an entire schedule. Unverified geometry can create thousands of phantom clashes, burying your team in useless RFIs.

Protecting Your Digital Assembly Line

The global Building Information Modeling market is exploding, projected to grow from USD 8.53 billion in 2024 to USD 23.74 billion by 2033, according to Precedence Research, all driven by the promise of greater efficiency. But this growth only magnifies the need for rock-solid governance as new tech enters the scene. The stakes are getting higher, which is why standards have never been more critical.

Your core responsibility as a BIM Manager is to protect that production process. The entire shift from CAD to BIM was a monumental effort to establish control over data. Allowing uncontrolled AI into this environment feels like a huge step backward, reintroducing the very chaos BIM was designed to eliminate.

The consequences of template drift are severe and hit the bottom line hard:

- Compromised Data Integrity: Your model stops being a reliable source of truth. Forget accurate takeoffs or dependable scheduling.

- Failed Permitting Prep: Models riddled with inconsistent data or non-standard elements are far more likely to get kicked back by regulatory bodies, leading to expensive delays.

- Increased Rework: Downstream teams—from engineers to fabricators—are counting on your data's accuracy. A corrupted model is a guarantee of expensive rework down the line.

Maintaining control isn't about blocking progress. It’s about making sure any new tool, AI or otherwise, plays by the rules of the disciplined framework you’ve built. Your company's profitability hinges on the operational consistency that comes from strong BIM standards. That is precisely what's at stake. The fear of losing control is really the fear of losing the predictability your firm sells to its clients.

Fear 2: The Explosion of Unverified Content and QA Load

AI evangelists love to talk about accelerating content creation. To an experienced BIM manager, that promise sounds less like a gift and more like a direct threat. It’s a potential tsunami of unverified geometry and data crashing into your carefully managed project environment. This isn’t just about more work; it’s about a fundamental shift in the type of work, one that could easily bury your production team.

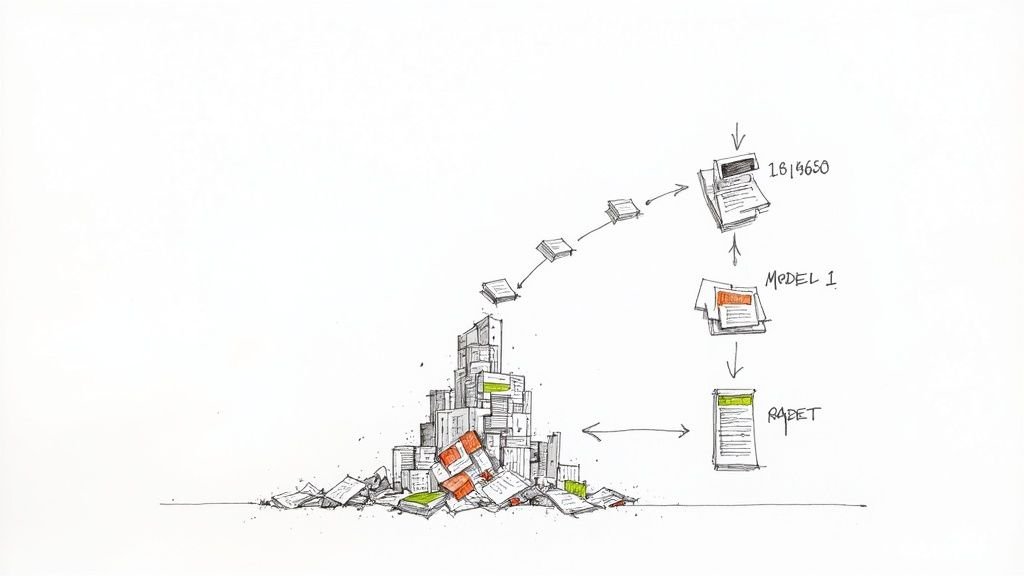

The fear is that AI doesn't actually reduce your workload at all. Instead, it just shoves the burden from controlled creation into a chaotic, high-stakes quality assurance phase. Your role dangerously morphs from proactive systems builder to reactive digital firefighter, stamping out endless spot fires of bad data.

The QA Burden Shifts From Geometry to Semantics

Traditionally, your QA process has been heavily focused on geometric coordination. You run clash detection, check clearances, and make sure the physical model is actually buildable. These are known quantities with well-established BIM workflows, even if they're complex.

Ungoverned AI changes the game completely. The new QA nightmare isn’t just about clashes; it’s about semantic and data validation—a much more insidious and difficult problem to solve.

Your primary concern is no longer just "Does this pipe hit this duct?" It becomes "Did the AI that placed this pipe understand its material spec, pressure rating, and the local code requirements for its insulation?" One is a simple geometric check; the other is a complex data audit that requires deep domain knowledge.

This shift creates a massive bottleneck. Automated software can tell you whether the data is present, but not whether it is correct. The real burden of verification falls squarely on you and your already-strained production teams.

How AI Shifts the BIM Quality Assurance Workload

When uncontrolled AI-generated content enters the picture, it completely changes the focus of your quality assurance efforts. The work moves from tangible, visible checks to abstract data validation that is far more time-consuming to audit. This table breaks down how that shift impacts everyday tasks.

| QA Task Area | Traditional BIM Workflow Focus | Ungoverned AI-Assisted Workflow Focus |
|---|---|---|
| Element Verification | Ensuring elements are correctly placed and modeled according to the design documents. | Verifying the origin and data integrity of thousands of AI-generated elements. |
| Clash Detection | Identifying and resolving geometric interferences between major building systems. | Investigating "phantom clashes" caused by unverified geometry with incorrect dimensions. |
| Data Validation | Checking that key parameters (e.g., fire ratings, model numbers) are filled out. | Auditing the semantic accuracy of every parameter to ensure it meets performance specs. |
| Standards Compliance | Confirming that families, naming conventions, and line styles adhere to the BEP. | Hunting for non-standard, AI-injected content that causes template drift and corrupts data. |

The core takeaway is simple: the QA process becomes less about what you can see and more about what you have to dig for. Each AI-generated element carries an invisible payload of data that requires human expertise to validate, dramatically increasing the QA workload.

From Gatekeeper to Bottleneck

This explosion in QA demand turns the BIM Manager from a strategic gatekeeper into an operational bottleneck. Every AI-generated output requires a human checkpoint, and that checkpoint is you. This completely undermines the predictability of your delivery model, as schedules become dependent on your ability to manually audit an overwhelming volume of content.

This increased load doesn't just threaten timelines; it erodes margin protection. Every hour spent validating an AI's mistake is an unbillable hour that eats directly into project profitability. The anxieties around this new wave of unverified content are understandable, especially given how quickly ChatGPT and similar tools have evolved.

The argument is simple: without a rigorous governance framework, AI doesn't reduce work. It just moves the effort to the most critical and time-consuming phase of the project—final quality assurance. This amplifies risk, kills predictability, and makes the successful delivery of clear construction project drawings and models nearly impossible. Your fear isn't about the technology; it's a calculated response to the operational chaos it threatens to create.

A Grounded Path Forward: Building Your AI Governance Framework

Acknowledging the risks is the first step. Taking decisive action is the next. The solution isn't to ban AI or pretend it doesn't exist; it's to impose the same rigorous discipline on it that you demand from your human teams. For a BIM manager, this means building a robust AI governance framework—a set of rules that forces AI to work within your established production systems, not outside of them.

This isn't about stifling new ideas. It's about protecting your firm's operational consistency and profitability. A clear governance framework puts you back in command, turning you into the strategic leader who guides AI adoption safely and ensures it enhances—rather than compromises—the predictability you deliver.

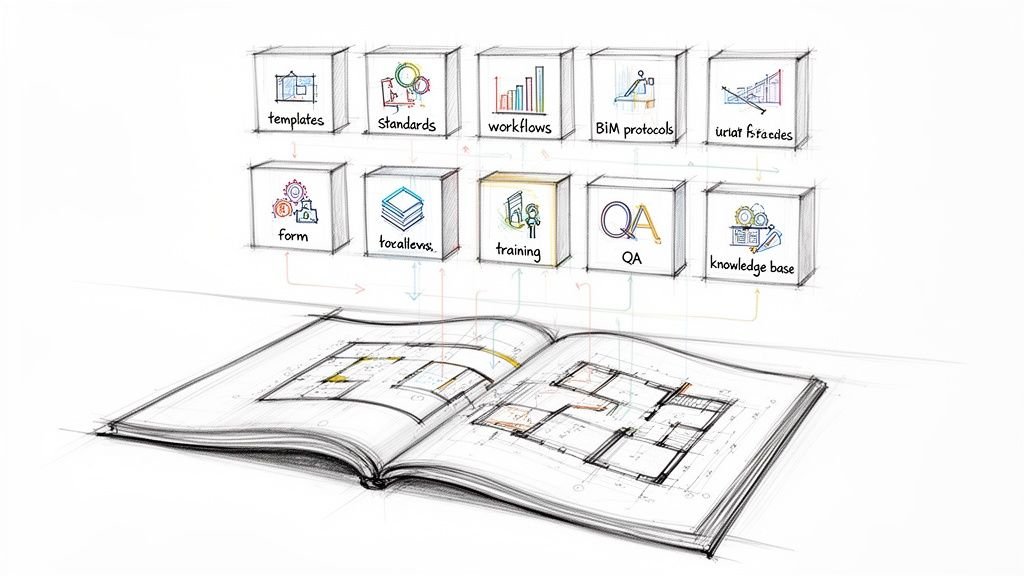

The Four Pillars of AI Control

An effective governance framework isn’t some abstract policy document; it's a practical, actionable system built on four key pillars. Each one directly addresses the core fears of losing control and being overwhelmed by unverified content. Think of these as the essential decision checkpoints for any AI-assisted workflow.

- Establish Clear AI Usage Guidelines: Define exactly which tools are approved, which are experimental, and which are forbidden. A simple "red, yellow, green" system works surprisingly well, classifying tools based on their risk to data integrity and template discipline.

- Create Controlled 'Sandbox' Environments: No new AI tool should ever touch a live project model without rigorous testing. A sandboxed project file allows your team to evaluate a tool's output against your BIM standards in a secure, isolated setting.

- Implement Rigorous Audit Trails: Mandate that any model element created or modified by an AI tool is tagged with a specific parameter. This creates a digital paper trail, allowing you to quickly isolate and audit AI-generated content.

- Develop Critical Evaluation Training: Train your team not just on how to use AI, but how to critically question its output. The goal is to cultivate healthy skepticism, turning your users into a frontline defense against bad data.

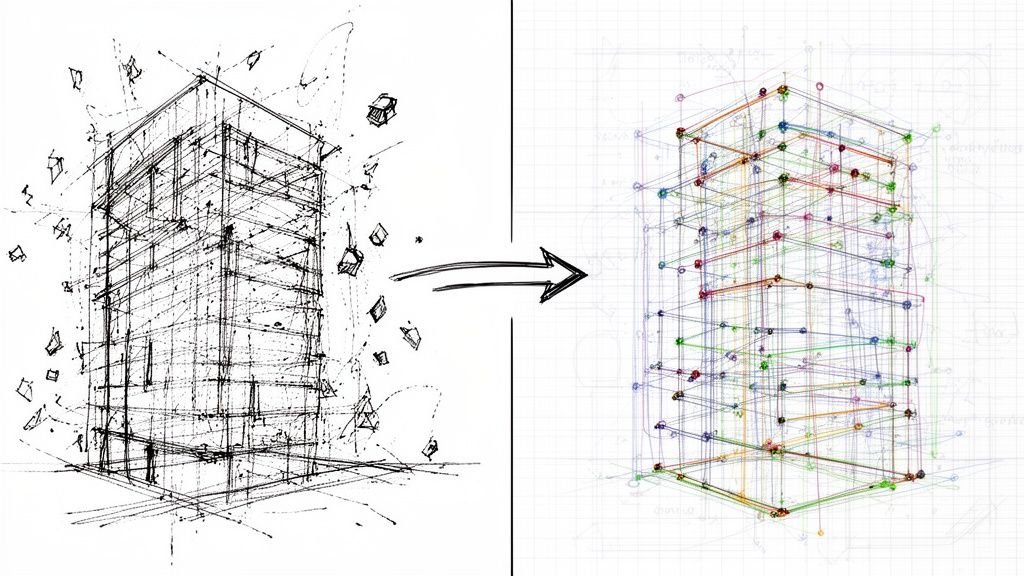

Introducing AI forces a fundamental shift in quality assurance. The focus moves beyond simple clash detection to a more rigorous, data-centric validation process.

As the infographic shows, the real challenge isn't just managing more content. It's fundamentally changing how we verify both geometric and data accuracy.

From Aspiration to Implementation

Putting this framework into practice is how you move from anxiety to action. The goal is to harness AI's speed but constrain it within the guardrails of your existing workflows. For a deeper dive into establishing robust AI oversight, the principles of Artificial Intelligence Governance can provide a valuable theoretical foundation for your practical rules.

Think of it this way: your role is to ensure every AI-generated component is treated like a new submittal. It must be vetted, verified, and approved before it's accepted into the project.

A governance framework isn't about saying 'no' to AI. It's about defining the terms of 'yes.' It's how you ensure that automation serves your production system, rather than letting your system be dictated by the chaos of automation.

Making Governance a Production Reality

To make these pillars a reality, start small and be specific. Your AI Usage Guidelines shouldn't be a 50-page document nobody reads; they should be a one-page checklist that a project architect can understand in five minutes.

- Specify Approved Tools: List the exact names of AI plugins or software that have passed your sandbox testing.

- Define Prohibited Actions: Forbid using public generative AI platforms with proprietary project data to prevent privacy breaches.

- Mandate Verification Steps: Require a manual sign-off from a senior team member for any AI-generated design option before it's presented to a client.

By implementing these practical rules, the BIM manager reasserts control over the production environment. You shift from being a reactive problem-solver to a proactive systems architect, building the necessary infrastructure to manage new technologies safely. This is how you protect your firm's margins and ensure the scalable, predictable delivery of every single project.

Turning AI From a Threat Into a Strategic Advantage

The very fears that keep you up at night—unverified geometry, template drift, and QA overload—are exactly what make a disciplined BIM manager more valuable than ever. Firms that dive headfirst into AI without a plan are heading for production chaos. Their projects will be plagued by rework, and their margins will erode under the weight of unmanaged data.

In sharp contrast, firms with strong BIM leadership will gain a massive competitive advantage by implementing a controlled, deliberate AI adoption strategy. It’s a classic case of discipline versus disorder. The winning firms won't be the ones with the most AI tools; they’ll be the ones with the best governance.

This is your opportunity to reframe the entire narrative. You’re not a barrier to new technology. You are the strategic leader who can turn a potential threat into your firm's greatest operational asset.

From Defense to Offense With AI Governance

A well-governed AI strategy isn't about restriction; it's about making your projects more predictable. The goal is to automate the repetitive, low-risk tasks that eat up your team's valuable time. This frees up your most skilled people for high-value problem-solving—the kind of work that actually drives project success and protects profitability.

This is the core of your playbook. You can let AI handle mundane tasks like clash detection pre-screening or annotation placement, but only within strict guardrails that you define.

- Protect the Core System: AI tools must adhere to your established BIM workflows and template discipline. No exceptions.

- Enhance Scalable Delivery: Controlled automation allows your delivery pods to handle more volume without sacrificing quality.

- Strengthen Margin Protection: By preventing AI-induced rework, you directly protect the project's bottom line.

This approach ensures that AI serves your production system, rather than letting the chaos of AI dictate your operations.

Your role as a BIM manager is evolving from being the keeper of standards to the architect of a tech-enabled production system. The firms that understand this will thrive; the ones that don't will be left cleaning up the mess.

By building a framework for safe AI adoption, you do more than just mitigate risk. You create a foundation for operational consistency that becomes a key selling point for your firm. You can walk into any client meeting and promise predictability—not because you avoid new technology, but because you manage it with unmatched discipline.

The path to harnessing AI safely begins with a clear plan. It requires a structured approach that prioritizes control, verification, and alignment with your firm's core production values. To help you start building this essential framework, we've developed a checklist that breaks down the key components of effective AI governance in a BIM environment.

Download our BIM AI Governance Checklist to get a practical, step-by-step guide for establishing the rules and processes needed to manage AI with confidence.

Your AI Governance Questions Answered

Even with a solid framework, putting AI governance into practice brings up real, on-the-ground questions. A BIM manager needs straightforward, technical answers to get through this transition without derailing production. Here, we'll tackle some of the most common questions we hear from production leaders tasked with turning abstract policies into concrete workflows.

These aren't just hypotheticals; they're the real-world headaches that come with integrating AI while protecting your firm’s hard-won production maturity and project margins. Our goal is to give you the clarity you need to move forward with confidence.

Where Do I Start With Creating AI Guidelines for My Team?

Keep it simple. The last thing you want is a ten-page document nobody reads. Start with a basic "red, yellow, green" framework that your team can grasp instantly. This isn't about writing a novel; it’s about creating clear, defensible boundaries that map directly to real production risks.

- Green Tools: These are the no-brainers: fully approved, low-risk AI utilities that won't mess with your model integrity. Think spell-check for annotations or simple data formatting scripts. They’re good to go without any special oversight.

- Yellow Tools: This is your sandbox. These are promising applications that are still in a pilot phase. They can only be used on a sandboxed project by a designated team, and all outputs must face 100% manual verification before they even touch a live project. This is where you’d test out new generative design or automated modeling tools.

- Red Tools: These are completely off-limits, no exceptions. This category is usually reserved for public-facing generative AI platforms where uploading project data creates a massive data privacy or intellectual property risk.

Start small, document every rule, and—this is key—link each guideline back to a specific risk you're trying to manage, like protecting template discipline or preventing data leaks. Your first set of guidelines is all about establishing predictable control.
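If it helps to see the "red, yellow, green" idea as something a script could enforce, here is a minimal Python sketch. Everything in it is an assumption for illustration: the tool names are placeholders rather than real products, the `may_use` helper is hypothetical, and treating an unlisted tool as red is one possible default, not a rule stated above.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    GREEN = "green"    # approved, no special oversight
    YELLOW = "yellow"  # pilot: sandbox projects only, full manual verification
    RED = "red"        # off-limits


@dataclass(frozen=True)
class ToolRule:
    tier: Tier
    risk: str  # the specific production risk this rule is meant to manage


# Hypothetical registry; these names are placeholders, not real products.
TOOL_RULES = {
    "annotation-spellcheck": ToolRule(Tier.GREEN, "none identified"),
    "generative-layout-pilot": ToolRule(Tier.YELLOW, "template drift"),
    "public-chatbot": ToolRule(Tier.RED, "data leak / IP exposure"),
}


def may_use(tool: str, project_is_sandbox: bool) -> bool:
    """Return True if the tool may run on this project under the tier rules."""
    rule = TOOL_RULES.get(tool)
    # Assumption: a tool nobody has reviewed is treated as red.
    if rule is None or rule.tier is Tier.RED:
        return False
    if rule.tier is Tier.YELLOW:
        return project_is_sandbox
    return True
```

Keeping the `risk` field next to each tier is one way to honor the "link each guideline back to a specific risk" advice: the registry doubles as the one-page document.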

How Can I Audit AI Content Without Tripling My Workload?

You can't. Not manually, anyway. Trying to check every single element an AI tool touches is a recipe for burnout. The sheer volume makes it impossible. Instead, you need to build a system of automated checks and balances to do the heavy lifting.

First, make it a rule: any element created or modified by an AI tool must be flagged with a specific shared parameter or placed on a dedicated workset. It’s a simple step, but it makes all AI-generated content instantly isolatable and filterable within the model.

This isn't just about tracking changes; it's about creating a clear audit trail. When a problem pops up downstream, you need to be able to trace it back to its source—human or machine—in minutes, not days.
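To make the audit-trail idea concrete, here is a rough Python sketch that works on element records exported from a model (for example, from a schedule). The `AI_Source` parameter name, the tool names, and the record layout are all assumptions; substitute whatever shared parameter and export format your firm actually uses.

```python
from collections import defaultdict

# Hypothetical export of model elements. "AI_Source" stands in for the
# shared parameter your firm defines to flag AI-created or AI-modified items.
elements = [
    {"id": 101, "family": "Door_Single_900", "AI_Source": ""},
    {"id": 102, "family": "Door_Single_900", "AI_Source": "layout-pilot"},
    {"id": 103, "family": "Wall_Generic_200", "AI_Source": "layout-pilot"},
    {"id": 104, "family": "Pipe_Cu_22", "AI_Source": "mep-router"},
]


def ai_elements_by_source(records):
    """Group element ids by the AI tool recorded in the AI_Source parameter."""
    grouped = defaultdict(list)
    for rec in records:
        source = (rec.get("AI_Source") or "").strip()
        if source:  # an empty value means human-authored under this convention
            grouped[source].append(rec["id"])
    return dict(grouped)


print(ai_elements_by_source(elements))
```

With a grouping like this, a downstream problem on a flagged element points straight at the tool that produced it, and everything else that tool touched is one lookup away.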

Next, lean on your validation tools. Use Dynamo scripts, Ideate Software tools, or Solibri rule sets to run automated checks against your BIM standards. These checks can scan for non-standard family names, incorrect parameter values, or untagged geometry. This automates the first QA pass, flagging basic data hygiene issues so you can focus your manual review on the things that matter most: constructability and design intent.
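As a tool-agnostic illustration of that first automated pass, here is a small Python sketch that checks exported element records. It is not the API of Dynamo, Ideate Software, or Solibri. The naming pattern (`Category_Type_Size`), the required parameters `FireRating` and `Mark`, and the record layout are all assumed for the example.

```python
import re

# Assumed naming rule: Category_Type_Size, e.g. "Door_Single_900".
FAMILY_NAME_PATTERN = re.compile(r"^[A-Z][A-Za-z]+_[A-Za-z0-9]+_[0-9]+$")
REQUIRED_PARAMS = ("FireRating", "Mark")  # hypothetical required parameters


def first_pass_issues(record):
    """Return a list of basic data-hygiene issues for one element record."""
    issues = []
    if not FAMILY_NAME_PATTERN.match(record.get("family", "")):
        issues.append("non-standard family name")
    for name in REQUIRED_PARAMS:
        # Presence check only: this cannot tell whether the value is correct.
        if not str(record.get(name, "")).strip():
            issues.append(f"missing {name}")
    return issues


def audit(records):
    """Map element id -> issues, keeping only elements that need review."""
    report = {}
    for rec in records:
        found = first_pass_issues(rec)
        if found:
            report[rec["id"]] = found
    return report
```

Note what this pass does not do: it confirms that a fire rating exists, not that it is the right one. That judgment stays with your manual review.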

What Is the Single Biggest Mistake a BIM Manager Can Make With AI?

Being passive. It’s a mistake that shows up in two equally dangerous ways: either ignoring AI completely and hoping it’s just a fad, or letting teams adopt tools without any central oversight or strategy. Both paths lead to disaster.

Ignoring AI basically makes your role as a technology leader obsolete. On the other hand, allowing unchecked adoption creates a tidal wave of technical debt, project risk, and operational chaos. You'll be the one left to clean it up, usually after significant damage has already been done to project schedules and budgets.

The only way forward is proactive engagement. As the BIM manager, you have to be the one starting the conversation about AI. You need to be testing the tools, defining the risks, and building the governance framework before your teams start experimenting on their own.

By leading the charge, you position yourself as a strategic enabler of technology, not a gatekeeper. You ensure AI is adopted in a way that actually enhances quality, predictability, and profitability—the very pillars your role is built on.

At BIM Heroes, we believe new technology should support disciplined production, not undermine it. We focus on building the systems and providing the support that ensures operational consistency and protects your margins. Learn how our BIM consulting and production services can help you navigate technology shifts with confidence.